The World of Reality, Causality and Real Artificial Intelligence: Exposing the Great Unknown Unknowns

"All men by nature desire to know." - Aristotle

"He who does not know what the world is does not know where he is." - Marcus Aurelius

"If I have seen further, it is by standing on the shoulders of giants." - Isaac Newton

"The universe is a giant causal machine. The world is “at the bottom” governed by causal algorithms. Our bodies are causal machines. Our brains and minds are causal AI computers". - Azamat Abdoullaev

Introduction

The 3 biggest unknown unknowns are described and analyzed in terms of human intelligence and machine intelligence.

These are the best ideas, constructs, or concepts humans have ever created:

- World [Reality, Being, Existence, Universe; the World Systems];

- Causality [Causation, or Cause-Effect Relationship; Mechanisms, Processes, Rules, Laws];

- Intelligence [Mind, Natural Intelligence, Machine Intelligence, AI, Artificial, Automated, Autonomous, Automatic Intelligence, Machine Learning, Deep Learning].

A deep understanding of reality and its causality is set to revolutionize the world, its science and technology, including AI machines.

This content is the introduction to the Real AI Project Confidential Report: How to Engineer Man-Machine Superintelligence 2025: AI for Everything and Everyone (AI4EE).

Real AI is designed as a true AI, a new generation of intelligent machines, that simulates/models/represents/maps/understands the world of reality, as objective and subjective worlds, digital reality and mixed realities, its cause and effect relationships, to effectively interact with any environments, physical, mental or virtual.

It supersedes the fragmentary models of AI, such as narrow and weak AI vs. strong and general AI, or statistical ML/DL vs. symbolic logical AI.

Universal Artificial Intelligence, and how much might cost Real AI Model

What are Unknown Unknowns?

The scope and scale of the world as the entity of entities, the totality of totalities, the system of systems, or the network of networks, as represented with human minds and machine mentality, may be determined by the relationships among several key knowledge domains:

what man/machine knows: is aware of and understands;

what man/AI does not know, or does not like to know: is aware of but does not understand;

what man/AI cannot know: understands but is not aware of;

what man/AI does not know that it does not know: neither understands nor is aware of.

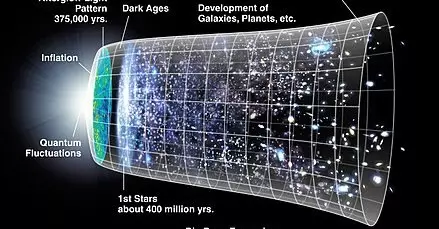

Together these form the four combinations of {known, unknown} with {known, unknown}: known knowns, known unknowns, unknown knowns, and unknown unknowns. They are like the material universe's parts: about 4.9% of baryonic matter, about 26.8% of dark matter, and about 68.3% of dark energy.

The World as a Whole as the Greatest Unknown Unknowns

There is a large number of sciences of all sorts and kinds, hard sciences and soft sciences. But what we are still missing is the science of all sciences, the Science of the World as a Whole, which makes it the biggest unknown unknown. It is what man/AI does not know that it does not know, neither understanding nor being aware of its scope and scale, sense and extent.

Some of the best attempts to define the world can be found in philosophy/metaphysics, such as

“the universe consists of objects having various qualities and standing in various relationships” (Whitehead, Russell),

“the world is the totality of states of affairs” (D. Armstrong, A World of States of Affairs),

"World of physical objects and events, including, in particular, biological beings; World of mental objects and events; World of objective contents of thought" (K. Popper, the model of three interacting worlds), etc.

That the world is still an unknown unknown can be seen from the most popular lexical ontology, WordNet (see supplement).

The construct of the world is typically missing its essential meaning, "the world as a whole", the world of reality, the ultimate totality of all worlds, universes, and realities, beings, things, and entities, the unified totalities.

The world or reality or being or existence is "all that is, has been and will be". The physical universe or cosmos is a key part of it, as "the totality of space and times and matter and energy, with all causative fundamental interactions". String theory predicts an enormous number of potential universes, of which our particular universe or cosmos (with its particles and the four fundamental forces of nature: gravity, the weak force, electromagnetism, and the strong force, governing everything that happens in the universe) represents only one.

SCIENCE AND TECHNOLOGY XXI: New Physica, Physics X.0 & Technology X.0

In all, the world is the totality of all entities and relationships: substances (actual or mental), structures (actual and conceptual), states (actual or mental), changes and processes (past, present and future), relationships (actual and conceptual) or phenomena, whether observable or not, actual, mental, digital or virtual.

This includes, as really existing, physical objects, immaterial things, unobservable entities posited by scientific theories like dark matter and energy, minds, God, numbers and other abstract objects, all the imaginary objects such as abstractions, literary concepts, or fictional scenarios, virtual and digital objects, the internet, cyberspace, multiverses, metaverses, and all possible worlds. According to scientific realism, they are all parts of the real world, having some ontological status or certain causal power.

Our world conception encompasses the WordNet's and ImageNet's entity, "that which is perceived or known or inferred to have its own distinct existence (living or nonliving)", with all its content and classifications.

It is systematically and consistently presented in the Universal Standard Entity Classification System [USECS] for human minds and machine intelligence:

The World Entities global reference

The Intelligent Content for iPhones, X.0 Web, Future Internet and Smart People

The Language of the World of Things;

THE CATALOG OF THE WORLD; THE WORLD CATALOGUE OF SUBSTANCES; THE WORLD CATALOGUE OF STATES

THE WORLD CATALOGUE OF CHANGES; THE WORLD CATALOGUE OF RELATIONS

THE ENCYCLOPEDIC KNOWLEDGE REFERENCE; SMART KNOWLEDGE WEB;

INTELLIGENT INTERNET OF EVERYTHING

https://www.slideshare.net/ashabook/universal-standard-entity-classification-system-usecs

There are a few basic levels of the world of reality, material, ideal and mixed:

- material reality or material world of material, actual, natural, physical or concrete entities;

- mental reality or mental world of subjective, personal realities of mental entities;

- social reality or social world of social entities;

- information reality or information world of information entities;

- digital reality, digital world of digital entities, cyberspace, Internet, Net, a worldwide network of computer networks for data transmission and exchange;

- virtual reality, virtual world of virtual entities, simulated world;

- intelligent reality, intelligent world of intelligent entities, a worldwide network of computer networks, smart things, human minds and AI systems, or I-World

As such, the World of Reality is Actuality - Mentality - Virtuality Continuum

There is no such thing as a mixed reality (MR), a hybrid of reality and virtual reality, but rather a mixed actuality, the merging of physical and virtual worlds producing new environments and visualizations, where physical and digital objects co-exist and interact in real time.

There is no such thing as an augmented reality (AR), but an augmented actuality (AA), an interactive experience of a physical environment where the objects that reside in the actual world are enhanced by computer-generated perceptual information, as across multiple sensory modalities, visual, auditory, haptic, somatosensory and olfactory.

There is no such thing as a computer-mediated reality, but a computer-mediated actuality, which refers to the ability to manipulate one's perception of actuality through the use of a digital technology, a wearable computer or a smartphone.

There is no such thing as a simulated reality, but a simulated actuality, in which actuality, physicality, or materiality could be simulated (as by quantum computer simulation) to a degree indistinguishable from "true" actuality, as in human dreams.

'METAVERSO', the sum of all virtual worlds, augmented reality, and the Internet.

Conscious minds, then, may or may not know that they are inside a natural simulation, a mentally generated world, as if created by the evil demon (also known as Descartes' demon, malicious demon or evil genius), or as in the dream that Zhuangzi described as the Transformation of Things.

Again, the essence of Reality, its Actuality, Mentality and Virtuality, is Causality or Causation, the second biggest unknown unknowns.

Reality, Universal Ontology and Knowledge Systems: Toward the Intelligent World

The World of Causality as a Great Unknown Unknowns

All the world's knowledge derives from causation driving the world as the engine of the universe.

Humans develop an ability to understand causality, causal power and mechanisms, making inferences, predictions and forecasts based on cause and effect, at an early age. Understanding causality amounts to deep learning about the world, its mechanisms, forces, processes, phenomena, laws and rules and the behavior of things. There is an increasing awareness of how the understanding of causality revolutionizes science and the world [The Book of Why: The New Science of Cause and Effect].

It defies common knowledge and understanding, but the real nature and mechanisms of cause-effect relationships are still the greatest unknown known. We are still completely unaware that we are unaware of its major features:

- what kind of entity can be a cause and what kind of entity can be an effect

- what kind of relationship can be causality, an asymmetric or symmetric relation

Owing to process philosophy, the ontology of becoming, or processism, it is now a known unknown that "every cause and every effect is respectively some process, event, becoming, or happening".

But whether "A is the cause and B the effect" or "B is the cause and A the effect" is true remains the unknown unknown.

The following standard narrative of causality is wrong and misleading:

"Causal relationships suggest change over time; cause and effect are temporally related, and the cause precedes the outcome. It is a unidirectional relationship between a cause and its effect [the final consequence of a sequence of actions or events expressed qualitatively or quantitatively, the result, the output]. All events are determined completely by previously existing causes, known as causal determinism.

Causality (causation, or cause and effect) is influence by which one event, process, state or object (a cause) contributes to the production of another event, process, state or object (an effect) where the cause is partly responsible for the effect, and the effect is partly dependent on the cause. In general, a process has many causes, which are also said to be causal factors for it, and all lie in its past. An effect can in turn be a cause of, or causal factor for, many other effects, which all lie in its future".

Law of Universal Causation

The law, principle, or rule of universal causation is generally asserted arbitrarily, in very loose ways, namely:

"everything in the universe has a cause and is thus an effect of that cause"

"every change in nature is produced by some cause"

"every effect has a specific and predictable cause"

"the universal law of successive phenomena is the Law of Causation"

"every event or phenomenon results from, or is the sequel of, some previous event or phenomenon, which being present, the other is certain to take place"

"relationships where a change in one variable necessarily results in a change in another variable"...

"relation between two temporally simultaneous or successive events when the first event (the cause) brings about the other (the effect)".

Causation can be approached through a “regularity” analysis, counterfactual analysis, manipulation analysis, statistical analysis, or probabilistic analysis.

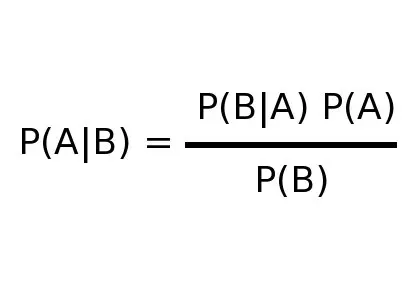

A real and true causality MUST be a symmetrical, circular, bidirectional and reversible productive relationship, as formalized by Bayes’ rule/theorem/law, which describes the probability of an event based on prior knowledge of conditions that might be related to the event:

P(X|Y)P(Y) = P(Y|X)P(X)

By its very design, Bayes’ rule/theorem/law is essentially about Real Causality, or Interactive Causation, Circular Causality, modeling the Universal Law of Causal Reversibility in probabilistic terms.

It is a basic law in real statistics and causal probability theory. In statistical classification, the two main approaches are commonly called the generative approach and the discriminative approach. Generative classifiers "generate" random instances (outcomes) from a model of the conditional probability of the observable X given a target Y = y, or from the joint distribution; discriminative classifiers model the conditional distribution of Y given X, or no distribution at all.

Given a model of one conditional probability, and estimated probability distributions for the variables X and Y, denoted P(X) and P(Y), one can estimate the opposite conditional probability using Bayes' rule:

P(X|Y)P(Y)=P(Y|X)P(X)

For example, given a generative model for P(X|Y), one can estimate:

P(Y|X)=P(X|Y)P(Y)/P(X)

And given a discriminative model for P(Y|X), one can estimate:

P(X|Y)=P(Y|X)P(X)/P(Y)
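The two inversions above can be checked numerically. The following is a minimal sketch; the helper name `invert_conditional` and all probability values are hypothetical, chosen only for illustration.

```python
def invert_conditional(p_a_given_b: float, p_b: float, p_a: float) -> float:
    """Return P(B|A) from P(A|B), P(B) and P(A) using Bayes' rule."""
    if not 0.0 < p_a <= 1.0:
        raise ValueError("P(A) must be in (0, 1]")
    return p_a_given_b * p_b / p_a


# Hypothetical values, chosen only for illustration.
p_x_given_y = 0.8   # generative direction: P(X|Y)
p_y = 0.1           # P(Y)
p_x = 0.2           # P(X)

# Generative model -> discriminative quantity: P(Y|X) = P(X|Y) P(Y) / P(X)
p_y_given_x = invert_conditional(p_x_given_y, p_y, p_x)

# Discriminative model -> generative quantity: P(X|Y) = P(Y|X) P(X) / P(Y)
p_x_given_y_back = invert_conditional(p_y_given_x, p_x, p_y)

print(round(p_y_given_x, 6), round(p_x_given_y_back, 6))
```

Applying the rule twice recovers the starting conditional probability up to floating-point error, which is the sense in which the two directions carry the same information once P(X) and P(Y) are known.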

Both types of classifiers (or models, learning methods, or algorithms), generative and discriminative, cover such models as the Hidden Markov Model (HMM), Bayesian networks (e.g. Naive Bayes, autoregressive models) and neural networks, all underpinned by direct and reversed causal processes, X > Y and Y > X.

Statistical models are known for their wide applications in statistical physics, thermodynamics, statistical mechanics, physics, chemistry, economics, finance, signal processing, information theory, pattern recognition (speech, handwriting, gesture recognition, part-of-speech tagging, etc.), AI, ML, DL and bioinformatics.

Twentieth-century definitions of causality draw upon statistics/probabilities/associations. One event (X) is said to cause another (Y) if it raises the probability of the other:

P(Y|X) > P(Y)
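As a statistical relation, probability raising runs both ways: by Bayes' rule, whenever P(X) and P(Y) are positive, X raises the probability of Y exactly when Y raises the probability of X. The sketch below checks this on a hypothetical joint distribution over two binary events; the numbers are assumptions made up for the example.

```python
# Hypothetical joint distribution over two binary events X and Y.
# joint[(x, y)] = P(X = x, Y = y); the four entries sum to 1.
joint = {(1, 1): 0.30, (1, 0): 0.10, (0, 1): 0.20, (0, 0): 0.40}

p_x = sum(p for (x, _), p in joint.items() if x == 1)   # P(X)
p_y = sum(p for (_, y), p in joint.items() if y == 1)   # P(Y)
p_xy = joint[(1, 1)]                                    # P(X, Y)

p_y_given_x = p_xy / p_x   # P(Y|X)
p_x_given_y = p_xy / p_y   # P(X|Y)

x_raises_y = p_y_given_x > p_y   # P(Y|X) > P(Y)
y_raises_x = p_x_given_y > p_x   # P(X|Y) > P(X)

print(x_raises_y, y_raises_x)
```

Both comparisons reduce to the same condition, P(X, Y) > P(X)P(Y), so they always agree.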

Then a real Bayes' network is a causally reversible probabilistic network, presented as a probabilistic graphical model representing a set of causal variables and their conditional dependencies via a bi-directional cyclic graph (DCG). It can model any stochastic processes and random phenomena in the world.

For example, a real Bayesian network could represent the probabilistic causal relationships between diseases and symptoms, and vice versa. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Given diseases, the network can be used to compute the probabilities of the presence of various symptoms.
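The two directions of the disease and symptom query can be sketched with a single symptom and a few mutually exclusive conditions. All names and probabilities below are hypothetical assumptions, not clinical data.

```python
# Hypothetical priors P(disease) over mutually exclusive conditions.
prior = {"flu": 0.05, "cold": 0.20, "healthy": 0.75}
# Hypothetical likelihoods P(fever | disease).
p_fever_given = {"flu": 0.90, "cold": 0.20, "healthy": 0.01}

# Diseases -> symptom: P(fever) by the law of total probability.
p_fever = sum(prior[d] * p_fever_given[d] for d in prior)

# Symptom -> diseases: P(disease | fever) by Bayes' rule.
posterior = {d: prior[d] * p_fever_given[d] / p_fever for d in prior}

for disease, p in posterior.items():
    print(f"P({disease} | fever) = {p:.3f}")
```

The same table of priors and likelihoods answers both questions: given the diseases it yields the probability of the symptom, and given the symptom it yields the probabilities of the diseases.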

It embraces as a special case a Bayesian network (belief network, or decision network) as a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG).

So, it is still a big unknown that a [true and real] causality is a sufficient and necessary relationship between cause and effect, or change in a cause changes an effect, and vice versa.

Cause effects Effect, if and only if the Effect inversely causes the Cause

Or, X causes, affects, influences, produces, or changes Y, if and only if Y affects (produces) X.

Changes in one variable X cause changes in others Y, IFF changes in Y cause changes in X.

The Principle of Reversible Causation (PRC) implies the structural causal models of reality operating with the most substantial general statements and observations about it.

All things act on and react upon one another by means of causal mechanisms and causal pathways. All entities receive and respond to stimuli from their environments. All organisms receive/detect/perceive and react/respond to stimuli from their environments. All cells receive and respond to signals from their surroundings/micro-environments. And signaling agents could be physical agents, chemical agents, biological agents (such as antigens in the immune system), or environmental agents.

Again, Popper's three worlds of reality involving three interacting worlds, called world 1, world 2 and world 3, are subject to the PRC.

World 1: the world of physical objects and events, including biological entities

World 2: the world of individual mental processes, the world of subjective or personal experiences

World 3: the world of the products of the human mind, having an effect back on world 2 through their representations in world 1.

That is, world 3, as a world of objective knowledge (languages, songs, paintings, mathematical constructions, theories, culture), is CAUSALLY acting on world 1 through world 2, having a CAUSAL effect back on world 2 through its representations in world 1.

Popper strongly advocates not only the existence of the products of the human mind, but also their being real rather than fictitious. As long as these have a causal effect upon us, they ought to be real. Products of the human mind, for example scientific theories, have proven to have an impact on the physical world by changing the way humans build things and utilize them. Popper believes that the causal impact of world 3 is more effective than scissors and screwdrivers (Popper, K. R. (1978). Three worlds. The Tanner Lecture on Human Values. The University of Michigan. Ann Arbor).

It is most critical to recognize the scope and scale of an interactive [cause-effect] relationship and how it differs from a non-causal relationship, link, or correlation, spatial contiguity or temporal sequence.

The Universal Law of Causal Reversibility: the Case of Fire and Smoke

Let's look at a piece of common-sense knowledge about linear causality, and how primitive, wrong and confusing it is: "Fire causes smoke". It is true only if the reverse is true, "Smoke causes fire". This kind of argument was enough for most people, including researchers, to reject the universal law of causal reversibility.

Not so fast! Look deeper, and always go to the real causes and effects. Such a level of understanding of a cause-effect relationship was normal for ancient people relying on their senses and observations.

What is the meaning of fire?

"A state, process, or instance of combustion in which fuel or other material is ignited and combined with oxygen, giving off light, heat, and flame. A burning mass of material, as on a hearth or in a furnace. the destructive burning of a building, town, forest, etc.; conflagration".

First, fire is ignited. Second, fire produces energy/heat, light and the exhaust products, including the smoke by-product, depending on what is burnt, wood, plastic, gas, oil or metal.

The fire triangle consists of the three elements needed to ignite any fire: heat, fuel, and an oxidizing agent (usually oxygen). They are necessary and sufficient conditions: all must be present and combined in the right mixture. A fire can be intervened upon or manipulated (controlled, prevented or extinguished) by removing any one of the elements in the fire triangle.

The fire tetrahedron adds the chemical chain reaction (combustion, or burning); the heat from a flame may provide enough energy to make the reaction self-sustaining. Combustion (fire) was the first controlled chemical reaction discovered by humans, and continues to be the main method of producing energy for humanity.

So, "fire causes smoke" is plain wrong; for a harmless fire is a smokeless fire. Fire causes heat, light and flame, and could involve smoke.

The Universal Law of Causal Reversibility states, "Heat or Light starts Fire". Fires occur naturally, ignited by lightning strikes or by volcanic products. "Light or Heat creates Fire" is the most critical reversed causal information. Note that the temporal lag between maintaining fire and making fire could be 200-300 thousand years, going back to the times of Peking Man.

REAL Causalism or Stochastic and Interactive Determinism

Causalism means "everything has a cause." Or, "nothing occurs at random, but everything for a reason and by necessity."

It can be extended to the idea that "every effect has a cause", "every event has a cause", and "every action has a reason".

Now, determinism means "every CHANGE, event or state of affairs, including every human decision and action, is the inevitable and necessary consequence of antecedent change or event or states of affairs".

This makes causalism more or less synonymous with Laplace's determinism that all events are completely determined by previously existing causes.

Or, as Laplace's Causal Intellect determines it: "We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes". — Pierre Simon Laplace, A Philosophical Essay on Probabilities

It is merely a linear causalism or determinism, when "the present state of the universe is the effect of its previous state and the cause of the state that follows it". Or, as my great ancestor Omar Khayyam poetically expressed it: "And the first Morning of Creation wrote / What the Last Dawn of Reckoning shall read".

As a matter of fact, the laws of nature are causal laws, and the world of reality consists of networks of changes/events as causes and effects.

Since it is the essence of scientific research to seek out causes, to find causal explanations for all phenomena, scientists should rely on real causalism or interactive determinism in their investigations and work.

The AA Universal Principle of Causation or Causality is simply formulated as

X causes Y, IFF Y causes X

Causality is a symmetrical mathematical relationship: If X is related with Y, Y is related with X. Or, if C is the cause of E, E is the cause of C. Therefore, correlations could imply a cause and effect nexus.

Mathematically, a Complete Causal Relation is a set of ordered pairs, triples, quadruples, and so on. A set of ordered pairs is called a two-place (or dyadic) causal relation; a set of ordered triples is a three-place (or triadic) causal relation; and so on. In general, a causal relation is any set of ordered n-tuples of objects. The major logical properties of relations include symmetry, transitivity, and reflexivity.
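The three logical properties can be tested directly on a dyadic relation stored as a set of ordered pairs. This is a minimal sketch; the function names and the example relations `one_way` and `two_way` are hypothetical.

```python
from typing import Hashable, Iterable, Set, Tuple

Pair = Tuple[Hashable, Hashable]


def is_reflexive(relation: Set[Pair], domain: Iterable[Hashable]) -> bool:
    """Every element of the domain is related to itself."""
    return all((a, a) in relation for a in domain)


def is_symmetric(relation: Set[Pair]) -> bool:
    """Whenever (a, b) is in the relation, (b, a) is too."""
    return all((b, a) in relation for (a, b) in relation)


def is_transitive(relation: Set[Pair]) -> bool:
    """Whenever (a, b) and (b, d) are in the relation, (a, d) is too."""
    return all(
        (a, d) in relation
        for (a, b) in relation
        for (c, d) in relation
        if b == c
    )


# Hypothetical dyadic relations over the objects "X" and "Y".
one_way = {("X", "Y")}               # X causes Y only
two_way = {("X", "Y"), ("Y", "X")}   # X causes Y and Y causes X

print(is_symmetric(one_way), is_symmetric(two_way))
```

Under the principle "X causes Y, IFF Y causes X", a causal relation corresponds to the `two_way` form, which passes the symmetry check while `one_way` does not.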

Dependence, association or correlation, all imply causality.

Here are some critical examples from the hard science of physics and classical mechanics, starting with Newton's laws of motion. The first law states that an object either remains at rest or continues to move at a constant velocity, unless it is acted upon by an external force.

The second law states that the rate of change of momentum of an object is directly proportional to the force applied; the net force acting upon an object is equal to the rate at which its momentum changes with time.

The third law states that all forces between two objects exist in equal magnitude and opposite direction: if one object A exerts a force F(A) on a second object B, then B simultaneously exerts a force F(B) on A, and the two forces are equal in magnitude and opposite in direction: F(A) = −F(B).

Mass–energy relation

According to Einstein's equation, E = mc², mass can be transformed into energy and energy can be transformed into mass (Einstein, "Does the inertia of a body depend upon its energy-content?", 1905). This means that the total mass and energy before a reaction in a closed system equals the total mass and energy after the reaction. In 1873 the Russian physicist and mathematician Nikolay Umov pointed out a relation between mass and energy for ether in the form E = kmc², where 0.5 ≤ k ≤ 1.
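As a worked instance of the mass-energy relation, the sketch below converts one gram of mass to its energy equivalent and back. The function names are hypothetical; the speed of light is the exact SI value.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)


def mass_to_energy(mass_kg: float) -> float:
    """Energy equivalent in joules of a rest mass in kilograms: E = m * c**2."""
    return mass_kg * C**2


def energy_to_mass(energy_j: float) -> float:
    """Mass equivalent in kilograms of an energy in joules: m = E / c**2."""
    return energy_j / C**2


energy = mass_to_energy(0.001)        # one gram of mass
mass_back = energy_to_mass(energy)    # and back again

print(f"{energy:.3e} J, {mass_back:.6f} kg")
```

One gram corresponds to roughly 9 × 10¹³ joules, and the inverse conversion returns the original mass.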

The mass-energy [reversible causal] relationships are demonstrated in nuclear fission reactions and thermonuclear fusion reactions implying a great amount of energy can be released by the nuclear fission/fusion self-caused chain reactions used in both nuclear weapons and nuclear power.

Again, there is an example from public health/epidemiology, where causation means that the exposure produces the effect. Causative factors can be the presence of an adverse exposure, e.g., driving drunk, working in a coal mine, using illicit drugs, or breathing in secondhand smoke, or the absence of a preventive exposure, e.g., not wearing a seat belt or not exercising, as well as conditions such as old age.

To conclude that exposure to tobacco smoke and air pollution cause the occurrence of lung cancer, one needs to review the body of evidence suggesting a causal relationship, like considering temporality and spatiality, all possible chances, biases, and confounders, first considering a key retroactive criterion.

- Is it possible that having lung cancer causes one to smoke?

Or, an association between using recreational drugs (a cause/input/predictor/exposure) and poor mental well-being (an effect/output/prediction) is causal, IFF a reverse causation exists, that is, people with poor mental well-being go on to use recreational drugs.

Again, REAL Causalism universally implies that there is no production or generation, action or affection, conditioning or influence without some inverse effects. This holds regardless of how one thing acts upon, modifies, affects, or influences another thing, whether by physical, mental, or moral power.

As an example, we shape our technologies as much as the technologies shape us. We humans designed the telephone, but from then on the telephone influenced how we communicated, conducted business, and conceived of the world. We also invented the automobile, but then rebuilt our cities around automotive travel and our geopolitics around fossil fuels.

The Ladder of Reality, Causality and Mentality, Science and Technology, Human Intelligence and Non-Human Intelligence (AI)

Still, causation needs to be distinguished from mere dependence, association, correlation, or statistical relationship (the link between two variables), as in the AA ladder of CausalWorld:

- Chance, statistical associations, causation as a statistical correlation between cause and effect, correlations (random processes, variables, stochastic errors), random data patterns, observations, Hume's observation of regularities, Karl Pearson's claim that causes have no place in science, Russell's “the law of causality” as a “relic of a bygone age”/Observational Big Data Science/Statistical Physics/Statistical AI/ML/DL [“The End of Theory: The Data Deluge Makes the Scientific Method Obsolete”]

- Common-effect relationships, bias (systematic error, as sharing a common effect, collider)/Statistics, Empirical Sciences

- Common-cause relationships, confounding (a common cause, confounder)/Statistics, Empirical Sciences

- Causal links, chains, causal nexus of causes and effects (material, formal, efficient and final causes; probabilistic causality, P(E|C) > P(E|not-C), doing and interventions, counterfactual conditionals, "if the first object had not been, the second had never existed", linear, chain, probabilistic or regression causality)/Experimental Science/Causal AI

- Reverse, reactive, reaction, reflexive, retroactive, reactionary, responsive, retrospective or inverse causality, as reversed or returned action, contrary action or reversed effects due to a stimulus, reflex action, inverse probabilistic causality, P(C|E) > P(C|not-E), as in social, biological, chemical, physiological, psychological and physical processes/Experimental Science

- Interaction, real causality, interactive causation, self-caused cause, causa sui, causal interactions: true, reciprocal, circular, reinforcing, cyclical, cybernetic, feedback, nonlinear deep-level causality, universal causal networks, as embedded in social, biological, chemical, physiological, psychological and physical processes/Real Science/Real AI/Real World/the level of deep philosophy, scientific discovery, and technological innovation

The Six Layer Causal Hierarchy defines the Ladder of Reality, Causality and Mentality, Science and Technology, Human Intelligence and Non-Human Intelligence (AI).

The CausalWorld [levels of causation] is a basis for all real world constructs, as power, force and interactions, agents and substances, states and conditions and situations, events, actions and changes, processes and relations; causality and causation, causal models, causal systems, causal processes, causal mechanisms, causal patterns, causal data or information, causal codes, programs, algorithms, causal analysis, causal reasoning, causal inference, or causal graphs (path diagrams, causal Bayesian networks or DAGs).

It fully reviews a causal graph analysis having a critical importance in data science and data-generated processes, medical and social research and public policy evaluation, statistics, econometrics, epidemiology, genetics and related disciplines.

The CausalWorld model covers Pearl's statistical linear causal metamodel, the ladder of causation: Association (seeing/observing) entails the sensing of regularities or patterns in the input data, expressed as correlations; Intervention (doing) predicts the effects of deliberate actions, expressed as causal relationships; Counterfactuals involve constructing a theory of (part of) the world that explains why specific actions have specific effects and what happens in the absence of such actions.

It must be noted that any causal inference statistics or AI models relying on "the ladder of causality" [The Book of Why: The New Science of Cause and Effect] are still fundamentally defective for missing the key levels of real nonlinear causality of the Six Layer Causal Hierarchy.

It subsumes causal models (or structural causal models) as conceptual modelling describing the causal mechanisms of causal networks, systems or processes, formalized as mathematical models representing causal relationships: an ordered triple (C, E, M), where C is a set of [exogenous] causal variables whose values are determined by factors outside the model; E is a set of [endogenous] causal variables whose values are determined by factors within the model; and M is a set of structural equations that express the value of each endogenous variable as a function of the values of the other variables in C and E.
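The triple of exogenous variables C, endogenous variables E and structural equations M can be sketched as plain data plus a small solver. The sketch assumes the equations can be evaluated in a fixed order (an acyclic model, the special case of the standard structural causal model); the variable names, the equations and the `solve` helper are all hypothetical.

```python
from typing import Callable, Dict, Optional, Sequence

Values = Dict[str, float]
Equation = Callable[[Values], float]


def solve(
    exogenous: Values,                       # C: values set outside the model
    equations: Dict[str, Equation],          # M: one equation per variable in E
    order: Sequence[str],                    # evaluation order of E (assumed acyclic)
    interventions: Optional[Values] = None,  # do(variable = value) overrides
) -> Values:
    """Compute all endogenous values, replacing intervened equations by constants."""
    values = dict(exogenous)
    interventions = interventions or {}
    for name in order:
        if name in interventions:
            values[name] = interventions[name]      # equation is cut by do(...)
        else:
            values[name] = equations[name](values)  # equation reads C and E
    return values


# Hypothetical model: treatment depends on u_t; outcome on treatment and u_o.
C = {"u_t": 1.0, "u_o": 0.5}
M = {
    "treatment": lambda v: v["u_t"],
    "outcome": lambda v: 2.0 * v["treatment"] + v["u_o"],
}
E_order = ["treatment", "outcome"]

observed = solve(C, M, E_order)
intervened = solve(C, M, E_order, interventions={"treatment": 0.0})

print(observed["outcome"], intervened["outcome"])
```

Setting `treatment` by intervention changes `outcome` while leaving the exogenous values untouched, which is how an intervention study isolates the effect of the treatment.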

The causal model allows intervention studies, such as randomized controlled trials, RCT (e.g. a clinical trial) to reduce biases when testing the effectiveness of the treatment-outcome process.

Besides, it covers such special things as causal situational awareness (CSA), understanding, sense-making or assessment, "the perception of environmental elements and events with respect to time or space, the comprehension of their meaning, and the projection of their future status".

The CSA is a critical foundation for causal decision-making across a broad range of situations, as the protection of human life and property, law enforcement, aviation, air traffic control, ship navigation, health care, emergency response, military command and control operations, self defense, offshore oil, nuclear power plant management, urban development, and other real-world situations.

In all, the AA ladder of CausalWorld with interactive causation and reversible causality covers all products of the human mind, including science, mathematics, engineering, technology, philosophy, and art:

- Science, Scientific method, Scientific modelling: Causal Science, Real Science

- Mathematics: Real, Causal Mathematics

- Probability theory and Statistics: Causal Statistics, Real Statistics, C-statistics

- Cybernetics 2.0 is engaged in the study of the circular causal and feedback mechanisms in any systems, natural, social or informational

- Computer science, AI, ML and DL, as Causal/Explainable AI, Real AI (RAI)

The Causal World of Science and Technology: Real Science vs. Fake Science: Science is a pseudoscience and vice versa

"The law of causation, according to which later events can theoretically be predicted by means of earlier events, has often been held to be a priori, a necessity of thought, a category without which science would not be possible." (Russell, External World p.179).

[Real] Causality is a key notion in the separation of science from non-science and pseudo-science, "consisting of statements, beliefs, or practices that claim to be both scientific and factual but are incompatible with the causal method". Replacing falsifiability or verifiability, the causalism principle or the causal criterion of meaning maintains that only statements that are causally verifiable are cognitively meaningful, or else they are items of non-science.

The demarcation between science and pseudoscience has philosophical, political, economic, scientific and technological implications. Distinguishing real facts and causal theories from modern pseudoscientific beliefs, as it is/was with astrology, alchemy, alternative medicine, occult beliefs, and creation science, is part of real science education and literacy.

REAL SCIENCE DEALS SOLELY WITH CAUSE AND EFFECT INTERRELATIONSHIPS, CAUSAL INTERACTIONS OF WORLD'S PHENOMENA

Science studies causal regularities in the relations and order of phenomena in the world.

The extant definitions, which leave out real causation, are no longer valid; they are outdated and liable to falsification as pseudoscience:

"Science (from the Latin word scientia, meaning "knowledge") is a systematic enterprise that builds and organizes knowledge in the form of testable explanations and predictions about the universe".

"Science, any system of knowledge that is concerned with the physical world and its phenomena and that entails unbiased observations and systematic experimentation. In general, a science involves a pursuit of knowledge covering general truths or the operations of fundamental laws".

"Science is the intellectual and practical activity encompassing the systematic study of the structure and behaviour of the physical and natural world through observation and experiment".

[Real] Science is a systematic enterprise that builds and organizes the world's data, information or knowledge in the form of causal laws, explanations and predictions about the world and its phenomena.

Real science is divided into the natural sciences (e.g., biology, chemistry, and physics), which study causal interactions and patterns in nature; the cognitive sciences (linguistics, neuroscience, artificial intelligence, philosophy, anthropology, and psychology); the social sciences (e.g., economics, politics, and sociology), which study causal interactions in individuals and societies; technological sciences (applied sciences, engineering and medicine); the formal sciences (e.g., logic, mathematics, and theoretical computer science). Scientific research involves using the causal scientific method, which seeks to causally explain the events of nature in a reproducible way.

Observation and Experimentation, Simulation and Modelling, all are important in Real Science to help establish dynamic causal relationships (to avoid the correlation fallacy).

Real Statistics vs Fake Statistics

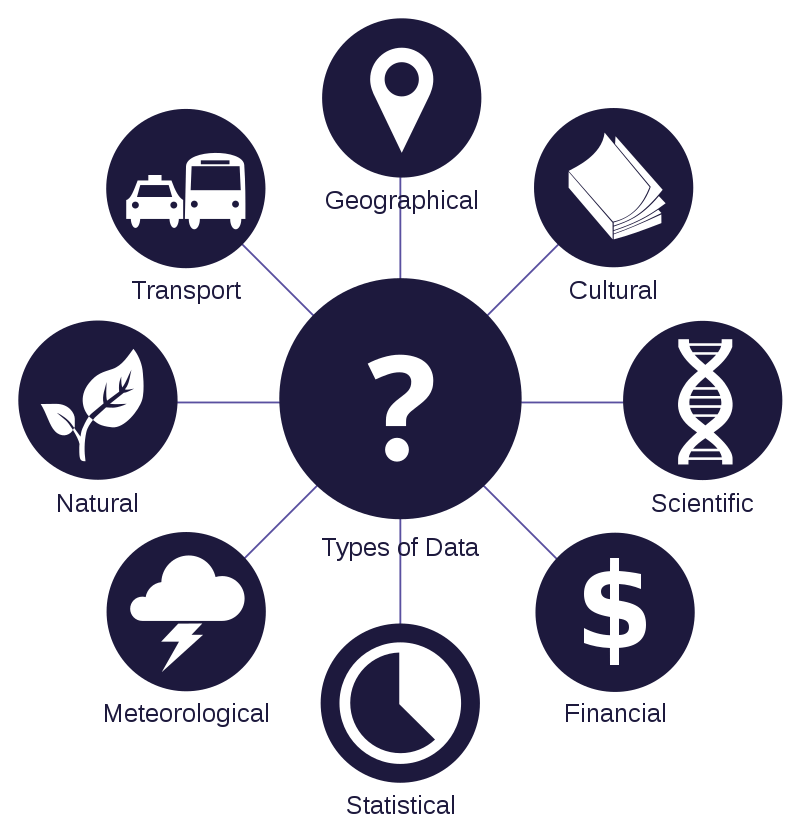

Statistics is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. Data are the facts and figures that are collected, analyzed, and summarized for presentation and interpretation. Data may be classified as either quantitative or qualitative. Quantitative data measure either how much or how many of something, and qualitative data provide labels, or names, for categories of like items.

In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied.

A standard statistical procedure involves the collection of data leading to a test of the relationship between two statistical data sets, or a data set and synthetic data drawn from an idealized model. A hypothesis is proposed for the statistical relationship between the two data sets, and this is compared as an alternative to an idealized null hypothesis of no relationship between two data sets. Rejecting or disproving the null hypothesis is done using statistical tests that quantify the sense in which the null can be proven false, given the data that are used in the test. Working from a null hypothesis, two basic forms of error are recognized: Type I errors (null hypothesis is falsely rejected giving a "false positive") and Type II errors (null hypothesis fails to be rejected and an actual relationship between populations is missed giving a "false negative").
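
The standard procedure described above can be sketched as a permutation test, one simple way to test a null hypothesis of no relationship between two data sets. The data values, the function name `permutation_p_value` and the 0.05 threshold are assumptions chosen for illustration.

```python
import random

def permutation_p_value(a, b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in means between a and b."""
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are exchangeable.
        rng.shuffle(pooled)
        left, right = pooled[:len(a)], pooled[len(a):]
        diff = abs(sum(left) / len(left) - sum(right) / len(right))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (extreme + 1) / (n_permutations + 1)

# Hypothetical measurements from two populations.
group_a = [5.1, 4.9, 5.3, 5.0, 5.2, 4.8, 5.1, 5.0]
group_b = [6.0, 6.2, 5.9, 6.1, 6.3, 5.8, 6.0, 6.1]

alpha = 0.05  # accepted Type I error rate (false positives)
p = permutation_p_value(group_a, group_b)
reject_null = p < alpha
print(reject_null)
```

Lowering `alpha` makes Type I errors rarer at the price of more Type II errors. Note that the test quantifies only a statistical relationship and says nothing about which variable, if any, is the cause.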

Real Statistics is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of causal data, a set of values of causal (qualitative or quantitative) variables about entity features (data units, persons, objects, etc). With n data units (individuals) and m variables (age, gender, marital status, or annual income), the data set would have a (n × m) matrix of data items.

Or, it is the science of making causal inferences about the parameters of populations on the basis of causal information obtained from randomly selected samples.

So, if data is the new oil of the digital economy, causal data is the new engine of the digital economy.

All types of data, as used in scientific research, business management (e.g., sales data, revenue, profits, stock price), finance, governance (e.g., crime rates, unemployment rates, literacy rates; censuses of the number of people), etc., have their real value as causal information.

Causal data could be measured, collected, tabulated, reported, analyzed, visualized as graphs, tables or images, represented, or coded, all with a view to causal inferences of future causal trends. Health care, biology, chemistry, physics, education, engineering, business, economics, computer science, machine learning and deep learning still make extensive use of statistical inference instead of causal inference.

Again, variables, as dependent and independent variables, are key constructs in mathematical modeling, statistical modeling and experimental sciences.

The statistical synonyms for an independent variable include "predictor variable", "regressor", "covariate", "manipulated variable", "explanatory variable", "extraneous variable", "exposure variable", "risk factor", "feature" (in machine learning and pattern recognition), "input variable", "exogenous variable" and "control variable".

The statistical synonyms for a dependent variable include "response variable", "regressand", "criterion", "predicted variable", "measured variable", "explained variable", "experimental variable", "endogenous variable", "responding variable", "outcome variable", "output variable", "target" and "label".

Dependent variables' values are considered as dependent, by some law or rule (e.g., by a mathematical function), on the values of other variables, which serve as independent variables. Some common independent variables are time, space, and mass, as in E = mc², where the energy E depends on the mass m.

Different communities use different names, while in the real statistics one thinks of independent and dependent variables in terms of cause and effect: an independent variable is the variable you view as the cause, while a dependent variable is the effect. In an experiment, you condition, control or manipulate the independent variable and measure the outcome in the dependent variable, while taking into account interaction or moderation variables.

In real statistics, a causal interaction is critical as the relationship among three or more variables, describing "a situation in which the effect of one causal variable on an outcome depends on the state of a second causal variable (that is, when effects of the two causes are not additive)". Such an interaction variable or interaction feature is "a variable constructed from an original set of variables to try to represent either all of the interaction present or some part of it". One example is a moderating variable, which is characterized statistically as an interaction: a categorical (e.g., sex, ethnicity, class) or quantitative (e.g., level of reward) variable that affects the direction and/or strength of the relation between dependent and independent variables.
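
A small numeric sketch makes the non-additivity concrete. The outcome model below, its coefficients and the variable names `treatment` and `exercise` are hypothetical; the product term plays the role of the interaction variable.

```python
# Hypothetical outcome model: the effect of `treatment` depends on `exercise`.
def outcome(treatment, exercise):
    return 1.0 + 2.0 * treatment + 1.0 * exercise + 3.0 * treatment * exercise

def treatment_effect(exercise):
    """Effect of switching treatment from 0 to 1 at a fixed level of the moderator."""
    return outcome(1, exercise) - outcome(0, exercise)

effect_without = treatment_effect(0)        # 2.0
effect_with = treatment_effect(1)           # 5.0
interaction = effect_with - effect_without  # 3.0, the coefficient of the product term

print(effect_without, effect_with, interaction)  # 2.0 5.0 3.0
```

If the two causes were additive, `interaction` would be zero and a single treatment effect would describe every subgroup.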

Now, a new type of statistics is emerging as Real, Causal Statistics, where many statistical constructs, such as correlation/regression coefficients, inferences, or null-hypothesis significance testing (NHST), are no longer valid and are falsified.

A statistical association or correlation between independent and dependent variables simply indicates that the values vary together. It does not necessarily suggest that changes in one variable cause changes in the other variable in terms of magnitude and direction.

If correlation does not prove causation, what statistical test do you use to assess causality? No statistical analysis can make that determination.
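
The gap between association and causation can be illustrated with a simulated confounder. In this sketch (all distributions and names are assumptions) a hidden common cause `z` drives both `x` and `y`, while `x` never affects `y`; the correlation seen in observational data disappears once `x` is set by randomization, as in an experiment.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(0)
n = 5000

# Observational regime: z causes x and z causes y; there is no x -> y link.
z = [rng.gauss(0, 1) for _ in range(n)]
x_obs = [zi + rng.gauss(0, 0.5) for zi in z]
y_obs = [zi + rng.gauss(0, 0.5) for zi in z]

# Interventional regime: x is assigned by a randomizer, cutting the z -> x link.
x_do = [rng.gauss(0, 1) for _ in range(n)]
y_do = [zi + rng.gauss(0, 0.5) for zi in z]

r_observational = pearson(x_obs, y_obs)   # strong, yet not causal
r_interventional = pearson(x_do, y_do)    # close to zero
print(round(r_observational, 2), round(r_interventional, 2))
```

The same correlation coefficient is computed in both regimes; what changes the conclusion is how the data were generated, not the statistic applied to them.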

Analyzing REAL causality is the key subject of REAL statistics.

It embraces 5 hierarchical levels of measurement, which refer to the relationship among the values that are assigned to the attributes of an entity variable and help humans and machines interpret the data from the causal variables. These different types of scales present a hierarchy/classification that describes the nature of causal information within the values assigned to variables. They are "nominal", "ordinal", "interval", "ratio" and "cardinal", unifying both "qualitative" scales (the nominal, or categorical, and ordinal types) and "quantitative" scales (all the rest).

In all, the world is the totality of all possible causes and effects, as the changes of entities and states of affairs, events, forces, and phenomena, bound by a globally distributed multidirectional causal nexus, as processes, interactions and relationships.

Some key operationalizations of universal causal networks could be the following natural and technological complexities:

- the universe, the totality of space and time and matter and energy, with all causative fundamental interactions;

- the human brain, a network of neural networks that connects billions of neurons via trillions of synapses;

- the internet, a network of networks that causally connects billions of digital devices worldwide

What Determines the World: Causality as the Life-or-Death Relationship

Formally, the world is modeled as a global cyclical symmetric graph/network of virtually unlimited nodes/units (entity variables, data, particles, atoms, neurons, minds, organizations, etc.) and virtually unlimited interrelationships (real data patterns) among them.

It could be approximated with Undirected Graphical Models (UGM), Markov and Bayesian Network Models, Computer Networks, Social Networks, Biological Neural Networks, or Artificial neural networks (ANNs).

A Bayesian network (a Bayes network, belief network, or decision network), an influence diagram (ID) (a relevance diagram, decision diagram or decision network), neural networks (natural and artificial), path analysis (statistics), structural equation modeling (SEM), mathematical models, computer algorithms, statistical methods, regression analysis, causal inference, causal networks, knowledge graphs, semantic networks, scientific knowledge, methods and modeling, and technology: all could and should represent causal relationships, their networks, mechanisms, laws and rules.

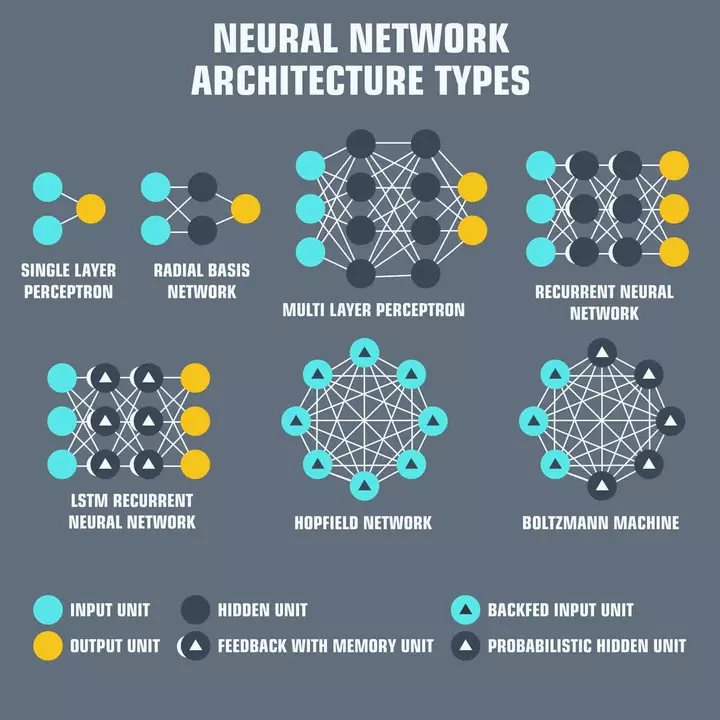

As an example, the NNs topologies, compiled by Fjodor van Veen from Asimov institute, are getting a real substance, but as causal NNs, as below:

The Causal Structure Learning and Causal Inference of the computational networks are the subject of Real AI/ML/DL platform.

True and Real AI embraces a Causal AI/ML/DL, operating with causal information, instead of statistical data patterns, performing causal inferences about causal relationships from [statistical] data, in the first place.

Current state-of-the-art correlation-based machine learning and deep learning have severe limitations in real dynamic environments failing to unlock the true potential of AI for humanity.

Real AI is a new category of intelligent machines that understand reality, both objective and subjective and mixed realities, and its complex cause and effect relationships, a critical step towards true AI.

Real AI engine computes the real world as a whole and in parts [e.g., the causal nexus of various human domains, such as fire technology and human civilizations; globalization and political power; climate change and consumption; economic growth and ecological destruction; future economy, unemployment and global pandemic; wealth and corruption, perspectives on the world’s future, etc.]. RAI is critical in determining the perspectives on the country’s future as well, defining how to organize for the changes ahead in critical areas:

- Defeating COVID-19

- Fostering economic competitiveness and social mobility

- Advancing social, economic and racial equity

- Building a low-carbon smart economy

- Creating a sustainable society

The World of AI as a Great Unknown Unknown

AI is powering a dramatic change in every part of human life, in every society, economy and industry across the globe, emerging as a strategic general purpose technology.

Then it is most significant to know what intelligence is in general, with all its major kinds, technologies, systems and applications, and how it is all interrelated with reality and causality.

What is Real intelligence?

It is the topmost true intelligence dealing with reality in terms of the world models and data/information/knowledge representations for cognition and reasoning, understanding and learning, problem-solving, predictions and decision-making, and interacting with the environment.

An Intelligence is any entity which is modeling and simulating the world to effectively and sustainably interact with any environments, physical, natural, mental, social, digital or virtual. This is a common definition covering any intelligent systems of any complexity, human, machine, or alien intelligences.

What is the state of the art of artificial intelligence?

It is a man-made anthropomorphic intelligence, developed as simple rule-based systems, symbolic logical general AI and/or sub-symbolic statistical narrow AI. Such an AI is an automation technology that emulates human performance, typically by learning from it, to enhance existing applications and processes.

A popular concept in AI is the intelligent agent (IA), which refers to an autonomous entity which acts, directing its activity towards achieving goals (i.e. it is an agent), upon an environment using observation through sensors and consequent actuators (i.e. it is intelligent). Intelligent agents may also learn or use knowledge to achieve their goals. They may be very simple or very complex. A reflex machine, such as a thermostat, is considered an example of an intelligent agent.
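
The thermostat example can be written down as a simple reflex agent. This is a minimal sketch: the setpoint, the tolerance and the toy room dynamics in `simulate` are assumptions made for illustration.

```python
def thermostat_agent(temperature, setpoint=20.0, tolerance=0.5):
    """Simple reflex agent: maps the current percept directly to an action."""
    if temperature < setpoint - tolerance:
        return "heat_on"
    if temperature > setpoint + tolerance:
        return "heat_off"
    return "no_change"

def simulate(start_temperature, steps=10):
    """Hypothetical room: heating adds 1 degree per step, otherwise it loses 0.5."""
    temperature, heating, history = start_temperature, False, []
    for _ in range(steps):
        action = thermostat_agent(temperature)   # sensor reading -> decision
        if action == "heat_on":
            heating = True
        elif action == "heat_off":
            heating = False
        temperature += 1.0 if heating else -0.5  # actuator changes the environment
        history.append((action, temperature))
    return history

for action, temperature in simulate(17.0):
    print(action, temperature)
```

The agent keeps no model of the room and learns nothing; its goal, a temperature near the setpoint, is fully encoded in the condition-action rules.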

It is misleadingly held that goal-directed behavior is the essence of intelligence, formalized as an "objective function", negative or positive, a "reward function", a "fitness function", a "loss function", a "cost function", a "profit function", a "utility function", all mapping an event [or values of one or more variables] onto a real number intuitively representing some "cost" or "loss" or "reward".

What are machine learning, deep learning and artificial neural networks?

Machine learning (ML) is a type of artificial intelligence (AI), viewed as a tabula rasa, having no innate knowledge, data patterns, rules or concepts, whose algorithms use historical data as input to predict new output values. Its most advanced deep learning techniques assume a “blank slate” state, and that all specific narrow “intelligence” can be rote learned from some training data. Meanwhile, a newly-born mammal starts life with a level of built-in inherited knowledge-experience. It stands within minutes, knows how to feed almost immediately, and walks or runs within hours.

Again, the entire advance in deep learning is enabled by the backpropagation algorithm, which propagates errors backwards through the network and so allows large and complex neural networks to learn from training data. Rumelhart, Hinton, and Williams published “Learning representations by back-propagating errors” in 1986. It took another 26 years before an increase in computing power (GPUs allowing the complex calculations required by backpropagation to be run in parallel) and the growth in “big data” enabled the use of that discovery at the scale seen today.
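
What "back-propagating errors" means can be shown on the smallest possible network. The sketch below assumes one input, one tanh hidden unit, one linear output and a squared-error loss; the starting weights, learning rate and step count are arbitrary illustrative choices.

```python
import math

def forward(w1, w2, x, target):
    h = math.tanh(w1 * x)           # hidden activation
    y = w2 * h                      # network output
    loss = 0.5 * (y - target) ** 2  # squared error
    return h, y, loss

def backward(w1, w2, x, target):
    """Backpropagation: apply the chain rule from the loss back to each weight."""
    h, y, _ = forward(w1, w2, x, target)
    d_y = y - target                # dL/dy
    d_w2 = d_y * h                  # dL/dw2
    d_h = d_y * w2                  # dL/dh, the error passed back to the hidden unit
    d_w1 = d_h * (1 - h ** 2) * x   # dL/dw1, using tanh'(a) = 1 - tanh(a)^2
    return d_w1, d_w2

def train(w1, w2, x, target, learning_rate=0.1, steps=200):
    """Plain gradient descent on a single training example."""
    for _ in range(steps):
        d_w1, d_w2 = backward(w1, w2, x, target)
        w1 -= learning_rate * d_w1
        w2 -= learning_rate * d_w2
    return w1, w2

initial_loss = forward(0.5, -0.3, 1.0, 0.8)[2]
w1, w2 = train(0.5, -0.3, x=1.0, target=0.8)
final_loss = forward(w1, w2, 1.0, 0.8)[2]
print(initial_loss, final_loss)
```

Real networks repeat the same chain-rule step across millions of weights, which is why the parallel arithmetic of GPUs mattered so much.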

Deep learning employs neural networks of various architectures and structures to train a model to understand the linear and non-linear relationship between input data and output data. Non-linearity is a key differentiator from traditional machine learning models which are mostly linear in nature.

Recommendation engines, fraud detection, spam filtering, malware threat detection, business process automation (BPA), predictive maintenance, customer behavior analytics, and business operational patterns are common use cases for ML.

Many of today's leading companies, such as Apple, Microsoft, Facebook, Google, Amazon, Netflix, Uber, Alibaba, Baidu, Tencent, etc., make Deep ML a central part of their operations.

Deep learning is a type of machine learning and artificial intelligence that imitates the human brain. Deep learning is a core of data science, which includes statistics and predictive modeling. At its simplest, deep learning is a way to automate predictive analytics, with algorithms stacked in a hierarchy of increasing complexity and abstraction. Unable to understand the concept of things, as feature sets, a computer program that uses DL algorithms has to be shown a training set and sort through millions of videos, audios, words or images, patterns of data/pixels in the digital data, to label/classify the things.

Most deep learning models are underpinned by an advanced type of machine learning algorithm known as the artificial neural network, which is why deep learning is also referred to as deep neural learning or deep neural networking.

DNNs come in many architectures, such as recurrent neural networks, convolutional neural networks, artificial neural networks and feedforward neural networks, and each has benefits for specific use cases. All function in similar ways: by feeding data in and letting the model figure out for itself whether it has made the right interpretation or decision about given data sets. Neural networks involve a trial-and-error process, so they need massive amounts of data on which to train, but supervised deep learning models can't train on unlabeled data. Yet most of the data humans and machines create, by a commonly cited estimate about 80%, is unstructured and unlabeled.

Today's AAI is over-focused on specific behavioral traits [BEHAVIORISM] or cognitive functions/skills/capacities/capabilities [MENTALISM], specific problem-solving in specific domains and there is still a very long way for such an AI to go in the future.

The key problem with AAI is “Will It Ever Be Possible to Create a General AI with Sentience and Conscience and Consciousness?”.

And its methodological mistake is the assumption that AI starts blank, acquiring knowledge as the outside world is impressed upon it. This is known as the tabula rasa theory: any intelligence, natural or artificial, is born or created "without built-in mental content, and therefore all knowledge about reality comes from experience or perception" (Aristotle, Zeno of Citium, Avicenna, Locke, psychologists and neurobiologists, and AAI/ML/DNN researchers).

Ibn Sina argued that the "human intellect at birth resembled a tabula rasa, a pure potentiality that is actualized through education and comes to know". In Locke's philosophy, at birth the (human) mind is a "blank slate" without rules for processing data; data is added and rules for processing it are formed solely by one's sensory experiences.

It was presented as "A New Direction in AI. Toward a Computational Theory of Perceptions" [Zadeh (2001)]. Here "at the moment when the senses of the outside world of AI-system begin to function, the world of physical objects and phenomena begins to exist for this intelligent system. However, at the same moment of time the world of mental objects and events of this AI-system is empty. This may be considered as one of the tenets of the model of perception. At the moment of the opening of perception channels the AI-system undergoes a kind of information blow. Sensors start sending the stream of data into the system, and this data has not any mapping in the personal world of mental objects which the AI-system has at this time" [Subjective Reality and Strong Artificial Intelligence].

Here is the mother of all mistakes. The statement should instead read: "Sensors start sending the stream of data into the system, and this data has ALL mapping in the personal world of mental objects which the AI-system has at this time".

Or, at very birth/creation the (human) mind or AI has the prior/innate/encoded/programmed knowledge of reality with causative rules for processing world's data, and an endless new stream of data is added to be processed by innate master algorithms.

In fact, the tabula rasa model of AI has been refuted by some successful ML game-playing systems, such as AlphaZero, a computer program developed by DeepMind to master the games of chess, shogi and go. It achieved superhuman performance in these board games using self-play and tabula rasa reinforcement learning, meaning it had no access to human games or hard-coded human knowledge about any of the games, BUT it was given the rules of the games.

Again, reinforcement learning is the problem faced by an agent that learns behavior through trial-and-error interactions with a dynamic environment. In all its varieties, associative, deep, safe, or inverse, it differs from supervised learning in not needing labelled input/output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected, focusing instead on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge).
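
The balance between exploration and exploitation can be sketched with an epsilon-greedy agent on a multi-armed bandit, one of the simplest reinforcement learning settings. The reward probabilities, the value of `epsilon` and the function name are illustrative assumptions.

```python
import random

def epsilon_greedy_bandit(reward_probabilities, steps=5000, epsilon=0.1, seed=0):
    """Learn the value of each arm purely from trial-and-error rewards."""
    rng = random.Random(seed)
    n_arms = len(reward_probabilities)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        # The environment returns only a reward, never the "correct" action.
        reward = 1.0 if rng.random() < reward_probabilities[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean reward for the chosen arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_arm, counts)
```

With `epsilon` set to 0 the agent can lock onto the first arm that ever pays out; with `epsilon` set to 1 it never uses what it has learned.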

All in all, the real AI model covers abstract intelligent agents (AIA) paradigm with the tabula rasa model of AI, with all their real world implementations as computer systems, biological systems, human minds, superminds [organizations], or autonomous intelligent agents.

In this rational-action-goal paradigm, an IA possesses an internal "model" of its environment, encapsulating all the agent's beliefs about the world.

What is Natural intelligence?

It is the intelligence created by nature, natural evolutionary mechanisms, as biological intelligence embodied as the brain, animal and human and any hypothetical alien intelligence.

So, what are true real and genuine AI and fake false and fictitious AI?

When we hear about artificial intelligence, the first thing we think of is human-like machines and robots, from Frankenstein's creature to Skynet's synthetic intelligence, that wreak havoc on humans and Earth. And many people still think of it as dystopian sci-fi far away from the truth.

Humanity has two polar future worlds to be taken over by two polar AIs.

The first, mainstream paradigm is an AAI (Artificial, Anthropic, Applied, Automated, Weak and Narrow "Black Box" AI), which is promoted by the big tech IT companies and an army of AAI researchers and scientists, developers and technologists, businessmen and investors, as well as all sorts of politicians and think tankers. Such an AI is based on the principle that human intelligence can be defined in a way that a machine can easily mimic it and execute tasks, from the simplest to the most complex.

An example is Nvidia-like AAI, which has recently released new Fake AI/ML/DL software, hardware.

Conversational FAI framework. Real-time natural language understanding will transform how we interact with intelligent machines and applications.

The framework enables developers to use pretrained deep learning models and software tools to create conversational AI services, such as advanced translation or transcription models, specialized chatbots and digital assistants.

FAI supercomputing. Nvidia introduced a new version of DGX SuperPod, Nvidia's supercomputing system that the vendor bills as made for AI workloads.

The new cloud-native DGX SuperPod uses Nvidia's Bluefield-2 DPUs, unveiled last year, providing users with a more secure connection to their data. Like Nvidia's other DGX SuperPods, this new supercomputer will contain at least 20 Nvidia DGX A100 systems and Nvidia InfiniBand HDR networking.

AI chipmakers can support three key AI workloads: data management, training and inferencing.

The tech giants (Google, Microsoft, Amazon, Apple and Facebook) have also created chips made for Fake AI/ML/DL, but these are intended for their own specific applications.

Real AI vs. Fake AI

“I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way similar to the human brain. That is the goal I have been pursuing. We are making progress, though we still have lots to learn about how the brain actually works.” — Geoffrey Hinton

“My view is: throw it all away and start again.” — Geoffrey Hinton

Why so? A badly wrong assumption AI = ML = DL = Human Brain = AGI = Strong AI

Real AI vs. Artificial General Intelligence (AGI or Strong AI)

How far are we from achieving Artificial General Intelligence? How can we determine if computers have acquired general intelligence? Such questions are becoming a main topic today.

A test for machine intelligence was first introduced as the “imitation game”, later known as the “Turing test”, in the article “Computing Machinery and Intelligence” (Turing, 1950).

The Turing test is restricted to blind language communication and misses causal relationships; for a computer to pass this test, it would have to handle causal knowledge. A basic requirement for passing the Turing test is that the computer is able to handle causal things and to intervene in the world.

Artificial General Intelligence (AGI or Strong AI), "the hypothetical ability of an intelligent agent to understand or learn any intellectual task that a human being can", is an acausal construct, unreal and, therefore, non-achievable.

Instead of modeling the world itself, AI systems, such as artificial general intelligence systems, are wrongly designed with the human brain as their reference, of which we know little, if anything.

Then the experts' predictions, such as artificial intelligence being achieved as early as 2030, or AGI or the singularity emerging by the year 2060, have no use, sense or value.

McKinsey has even issued "An executive primer on artificial general intelligence" with the annotation: "While human-like artificial general intelligence may not be imminent, substantial advances may be possible in the coming years. Executives can prepare by recognizing the early signs of progress".

Again, there is only one valid form of AI, Artificial Real Intelligence, or real AI having a consistent, coherent, comprehensive causal model of reality.

Real AI simulates/models/represents/maps/understands the world of reality, as objective and subjective worlds, digital reality and mixed realities, its cause and effect relationships, to effectively interact with any environments, physical, mental or virtual.

It overrules the fragmentary models of AI, as narrow and weak AI vs. strong and general AI, statistical ML/DL vs. symbolic logical AI.

The true paradigm is a RAI (Real Causal Explainable and "White Box" AI), or the real, true, genuine and autonomous cybernetic intelligence vs the extant fake, false and fictitious anthropomorphic intelligence.

An example of Real Intelligence is a hybrid human-AI Technology, Man-Machine Superintelligence, which is mostly unknown to the big tech and AAI army. Its goal is to mimic reality and mentality, human cognitive skills and functions, capacities and capabilities and activities, in computing machinery.

The Man-Machine Superintelligence as [RealAI] is all about 5 interrelated universes, as the key factors of global intelligent cyberspace:

- reality/world/universe/nature/environment/spacetime, as the totality of all entities and relationships;

- intelligence/intellect/mind/reasoning/understanding, as human minds and AI/ML models;

- data/information/knowledge universe, as the world wide web; data points, data sets, big data, global data, world’s data; digital data, data types, structures, patterns and relationships; information space, information entities, common and scientific and technological knowledge;

- software universe, as the web applications, application software and system software, source or machine codes, as AI/ML codes, programs, languages, libraries;

- hardware universe, as the Internet, the IoT, CPUs, GPUs, AI/ML chips, digital platforms, supercomputers, quantum computers, cyber-physical networks, intelligent machinery and humans

How it is all represented, mapped, coded and processed in cyberspace/digital reality by computing machinery of any complexity, from smart phones to the internet of everything and beyond.

AI is the science and engineering of reality-mentality-virtuality [continuum] cyberspace, its nature, intelligent information entities, models, theories, algorithms, codes, architectures and applications.

Its subject is to develop the AI Cyberspace of physical, mental and digital worlds, the totality of any environments, physical, mental, digital or virtual, and application domains.

RAI is to represent and model, measure and compute, analyse, interpret, describe, predict, and prescribe, processing unlimited amounts of big data, transforming unstructured data (e.g. text, voice, etc.) to structured data (e.g. categories, words, numbers, etc.), discovering generalized laws and causal rules for the future.

RAI as a symbiotic hybrid human-machine superintelligence is to overrule the extant statistical narrow AI with its branches, such as machine learning, deep learning, machine vision, NLP, cognitive computing, etc.

AAI: Opportunities and Risks

Unlike RAI, AAI presents an existential danger to humanity if it progresses as it is, as specialized superhuman automated machine learning systems, from task-specific cognitive robots to professional bots to self-driving autonomous transport.

The list of those who have pointed to the risks of AI numbers such luminaries as Alan Turing, Norbert Wiener, I.J. Good, Marvin Minsky, Elon Musk, Professor Stephen Hawking and even Microsoft co-founder Bill Gates.

“Mark my words, AI is far more dangerous than nukes.” “With artificial intelligence we are summoning the demon.” Tesla and SpaceX founder Elon Musk

“Unless we learn how to prepare for, and avoid, the potential risks, AI could be the worst event in the history of our civilization.” “The development of full artificial intelligence could spell the end of the human race…. It would take off on its own, and re-design itself at an ever increasing rate.” “Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.” The late physicist Stephen Hawking

“Computers are going to take over from humans, no question...Will we be the gods? Will we be the family pets? Or will we be ants that get stepped on?” Apple co-founder Steve Wozniak

“I am in the camp that is concerned about super intelligence,” Bill Gates

AI has the ability to “circulate tendentious opinions and false data that could poison public debates and even manipulate the opinions of millions of people, to the point of endangering the very institutions that guarantee peaceful civil coexistence.” Pope Francis, “The Common Good in the Digital Age”

A human-like and human-level AAI is a foundationally and fundamentally wrong idea, even more harmful than human cloning. Ex Machina’s Ava is a good lesson NOT to copy the biological brain/human intelligence.

A humanoid robot with human-level artificial general intelligence (AGI) could be of 3 types:

- The human-like zombie mindless AI reaches human-level intelligence using some combination of brute force search techniques and machine learning with big data, exploiting senses and computational capacity unavailable to humans.

- The human-like mindful AI mimicking exactly the neural processing in the human brain, with a whole brain emulation: "copying the brain of a specific individual – scanning its structure in nanoscopic detail, replicating its physical behaviour in an artificial substrate, and embodying the result in a humanoid form".

- The superintelligent AI exceeds humans in all respects.

In all 3 scenarios, humans are doomed to occupy a subordinate position in the space of possible minds, as pictured by the H-C plane [the human-likeness and capacity for consciousness of various real entities, both natural and artificial], unless we go for the ‘Void of Inscrutability’, a human-unlike real man-AI superintelligence.

RISKS OF NON-REAL ARTIFICIAL INTELLIGENCE

- AI, Robotics and Automation-spurred massive job loss.

- Privacy violations: a handful of big tech AI companies control billions of minds every day, manipulating people’s attention, opinions, emotions, decisions, and behaviors with personalized information.

- 'Deepfakes'

- Algorithmic bias caused by bad data.

- Socioeconomic inequality.

- Weapons automatization, AI-powered weaponry, a global AI arms race.

- Malicious use of AI, threatening digital security (e.g. through criminals training machines to hack or socially engineer victims at human or superhuman levels of performance), physical security (e.g. non-state actors weaponizing consumer drones), political security (e.g. through privacy-eliminating surveillance, profiling, and repression, or through automated and targeted disinformation campaigns), and social security (misuse of facial recognition technology in offices, schools and other venues).

- Human brains hacking and replacement.

- Destructive superintelligence — aka artificial general human-like intelligence

“Businesses investing in the current form of machine learning (ML), e.g. AutoML, have just been paying to automate a process that fits curves to data without an understanding of the real world. They are effectively driving forward by looking in the rear-view mirror,” says causaLens CEO Darko Matovski.

Anthropomorphism in AI, the attribution of human-like feelings, mental states, and behavioral characteristics to computing machinery, unlike inanimate objects, animals, natural phenomena and supernatural entities, is an immoral enterprise.

For all AAI applications, it implies building AI systems based on embedded ethical principles, trust and transparency. This results in all sorts of frameworks, such as the Framework of ethical aspects of artificial intelligence, robotics and related technologies, as proposed by the EC, and the EU guidelines on ethics in artificial intelligence: Context and implementation.

Such a human-centric approach to human-like artificial intelligence... "highlights a number of ethical, legal and economic concerns, relating primarily to the risks facing human rights and fundamental freedoms. For instance, AI poses risks to the right to personal data protection and privacy, and equally so a risk of discrimination when algorithms are used for purposes such as to profile people or to resolve situations in criminal justice. There are also some concerns about the impact of AI technologies and robotics on the labour market (e.g. jobs being destroyed by automation). Furthermore, there are calls to assess the impact of algorithms and automated decision-making systems (ADMS) in the context of defective products (safety and liability), digital currency (blockchain), disinformation-spreading (fake news) and the potential military application of algorithms (autonomous weapons systems and cybersecurity). Finally, the question of how to develop ethical principles in algorithms and AI design".

Now, as to Ethics guidelines for trustworthy AI, trustworthy AI should be:

(1) lawful - respecting all applicable laws and regulations

(2) ethical - respecting ethical principles and values

(3) robust - both from a technical perspective while taking into account its social environment

Again, all the mess-up starts from its partial human-centric definition:

“Artificial intelligence (AI) refers to systems that display intelligent behaviour by analysing their environment and taking actions – with some degree of autonomy – to achieve specific goals. AI-based systems can be purely software-based, acting in the virtual world (e.g. voice assistants, image analysis software, search engines, speech and face recognition systems) or AI can be embedded in hardware devices (e.g. advanced robots, autonomous cars, drones or Internet of Things applications).”

Or, updated definition of AI. “Artificial intelligence (AI) systems are software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best action(s) to take to achieve the given goal. AI systems can either use symbolic rules or learn a numeric model, and they can also adapt their behaviour by analysing how the environment is affected by their previous actions. As a scientific discipline, AI includes several approaches and techniques, such as machine learning (of which deep learning and reinforcement learning are specific examples), machine reasoning (which includes planning, scheduling, knowledge representation and reasoning, search, and optimization), and robotics (which includes control, perception, sensors and actuators, as well as the integration of all other techniques into cyber-physical systems).”

Why creating ML/AI based on human brains is not only immoral but will also never succeed can be seen from the limitations and challenges of deep learning neural networks, as indicated below:

DL neural nets are good at classification and clustering of data, but they are not great at other decision-making or learning scenarios such as deduction and reasoning.

The biggest limitation of deep learning models is they learn through observations. This means they only know what was in the data on which they trained. If a user has a small amount of data or it comes from one specific source that is not necessarily representative of the broader functional area, the models will not learn in a way that is generalizable.
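The generalization problem described above can be sketched with a deliberately simple stand-in. The example below is not deep learning: it fits a straight line by least squares (an assumption chosen to keep the sketch short) to data from a narrow range, then asks for a prediction far outside that range. The names `fit_line`, `true_process` and `predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def true_process(x):
    """The real-world relationship, unknown to the model."""
    return x * x


# Training data covers only the narrow range 0.0 .. 1.0.
train_x = [i / 10 for i in range(11)]
train_y = [true_process(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)


def predict(x):
    return slope * x + intercept


# Error inside the observed range versus far outside it.
in_range_error = max(abs(predict(x) - true_process(x)) for x in train_x)
out_of_range_error = abs(predict(3.0) - true_process(3.0))
print(in_range_error, out_of_range_error)
```

Inside the training range the fit looks acceptable; at x = 3.0 the error is many times larger, because the model only knows the region its data came from.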

The hardware requirements for deep learning models can also create limitations. Multicore high-performing graphics processing units (GPUs) and other similar processing units are required to ensure improved efficiency and decreased time consumption. However, these units are expensive and use large amounts of energy. Other hardware requirements include random access memory and a hard disk drive (HDD) or RAM-based solid-state drive (SSD).

Other limitations and challenges include the following:

Deep learning requires large amounts of data. Furthermore, the more powerful and accurate models will need more parameters, which, in turn, require more data.

Once trained, deep learning models become inflexible and cannot handle multitasking. They can deliver efficient and accurate solutions but only to one specific problem. Even solving a similar problem would require retraining the system.

There are many kinds of neural networks that form a sort of "zoo" with lots of different species and creatures for various specialized tasks. There are neural networks such as FFNNs, RNNs, CNNs, Boltzmann machines, belief networks, Hopfield networks, deep residual networks and other various types that can learn different kinds of tasks with different levels of performance.

Another major downside is that neural networks are a "black box" -- it's not possible to examine how a particular input leads to an output in any sort of explainable or transparent way. For applications that require root-cause analysis or a cause-effect explanation chain, this makes neural networks not a viable solution. For these situations where major decisions must be supported by explanations, "black box" technology is not always appropriate or allowed.

Any application that requires reasoning -- such as programming or applying the scientific method -- long-term planning and algorithm-like data manipulation is completely beyond what current deep learning techniques can do, even with large data.

It might be a combination of supervised, unsupervised and reinforcement learning that pushes the next breakthrough in AI forward.

Deep learning examples. Because deep learning models process information in ways similar to the human brain, they can be applied to many tasks people do. Deep learning is currently used in most common image recognition tools, natural language processing (NLP) and speech recognition software. These tools are starting to appear in applications as diverse as self-driving cars and language translation services.

Use cases today for deep learning include all types of big data analytics applications, especially those focused on NLP, language translation, medical diagnosis, stock market trading signals, network security and image recognition.

Machine Intelligence and Causation

It is common knowledge that current ML technology fails when applied to dynamic, complex systems. It produces static models that overfit to yesterday’s world. The models are data-hungry and unintelligible to humans, with the following weaknesses:

- Static models

- Historic correlations

- Black box

- Observational data only

- Predictions only

Causal AI, by contrast, comes with these competing features:

- Dynamic models

- Causal drivers

- Explainable

- Understands business context

- Predictions, interventions & counterfactuals

Causal AI is a new category of intelligent machines that understand cause and effect ― a major step towards true AI.

It is widely recognized that Understanding Causality Is the Next Challenge for Machine Learning. Deep neural nets do not interpret cause and effect, or why the associations and correlations exist.
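The gap between historic correlations and interventions can be sketched with a small simulated structural causal model. Everything in it is an assumption made for illustration: a hidden confounder Z drives both X and Y, the true effect of X on Y is set to 1.0, and the names `simulate` and `slope` are hypothetical. It does not represent any particular Causal AI product.

```python
import random

TRUE_EFFECT = 1.0  # assumed causal effect of X on Y in this toy model


def slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def simulate(n, intervene, rng):
    """Draw n samples; if intervene is True, X is set independently of Z."""
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)  # hidden confounder
        if intervene:
            x = rng.gauss(0, 1)      # do(X): the link Z -> X is cut
        else:
            x = z + rng.gauss(0, 1)  # observational: Z influences X
        y = TRUE_EFFECT * x + 3.0 * z + rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    return xs, ys


rng = random.Random(0)
observational_slope = slope(*simulate(20000, intervene=False, rng=rng))
interventional_slope = slope(*simulate(20000, intervene=True, rng=rng))
print(observational_slope, interventional_slope)
```

In this setup the observational slope settles near 2.5, well above the true effect, while the interventional slope settles near 1.0. A purely correlational model trained on the observational data would therefore overstate what changing X actually does.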

Teaching machines to know/understand "why" enables them to transfer their causal data patterns [knowledge, rules, laws, generalizations] to other environments.

True causality knowledge, a deep understanding of cause and effect, is critical for a Causal AI/ML/DL, like in:

Causal AI – machine learning for the real world

A causal AI platform keeps the advantages of comprehensive digitization and automation – one of the key benefits of machine learning – allowing zettabytes of datasets to be cleaned, sorted and monitored simultaneously, and combines all this data with causal data models and causal/explainable insights – traditionally the sole competency of domain experts.

Real AI as a Global Predictor

A Causal AI machine is powerful enough not only to explain, but also to predict, forecast or estimate [causal] timelines of events, human life, societies, nature and the universe.

Today, the future is largely unpredictable; we are even unaware of the number of epidemic waves to come.

On a small practical scale, we have predictive analytics with its machine learning and deep learning models, exploiting pattern recognition to analyze current and historical facts and make predictions about the future.

Among predictive techniques, there are many forecasting methods, qualitative and quantitative, supplemented by strategic foresight or futures [futures studies, futures research or futurology], with no big utility. It all revolves around a pattern-based understanding of past and present, used to explore the possibility of future events and trends.

On a large scale, the timeline of nature is a big mystery even in hindsight, not to mention the timeline of the universe, where we have poor ideas about its history, far future and ultimate fate.

Summing up

Real [Causal] AI as a Synthesized Man-Machine Intelligence and Learning (MIL) is one of the greatest strategic innovations in all human history. It is fast emerging as an integrating general purpose technology (GPT) embracing all the traditional GPTs, such as electricity, computing, and the internet/WWW, as well as the emerging technologies: Big Data, Cloud and Edge Computing, ML, DL, Robotics, Smart Automation, the Internet of Things, biometrics, AR (augmented reality)/VR (virtual reality), blockchain, NLP (natural language processing), quantum computing, 5-6G, bio-, neuro-, nano-, cognitive and social networks technologies.

But today's narrow, weak and automated AI of Machine Learning and Deep Learning, as implementing human brains/mind/intelligence in machines that sense, understand, think, learn, and behave like humans, is an existential threat to the human race by its anthropic conception and technology, strategy and policy.

The Real AI is to merge Artificial Intelligence (Weak AI, General AI, Strong AI and ASI) and Machine Learning (Supervised learning, Unsupervised learning, Reinforcement learning or Lifelong learning) as the most disruptive technologies for creating real-world man-machine super-intelligent systems.

Explainable Artificial Intelligence (XAI)

The Explainable AI (XAI) program aims to create a suite of machine learning techniques that:

- Produce more explainable models, while maintaining a high level of learning performance (prediction accuracy); and

- Enable human users to understand, appropriately trust, and effectively manage the emerging generation of artificially intelligent partners.