
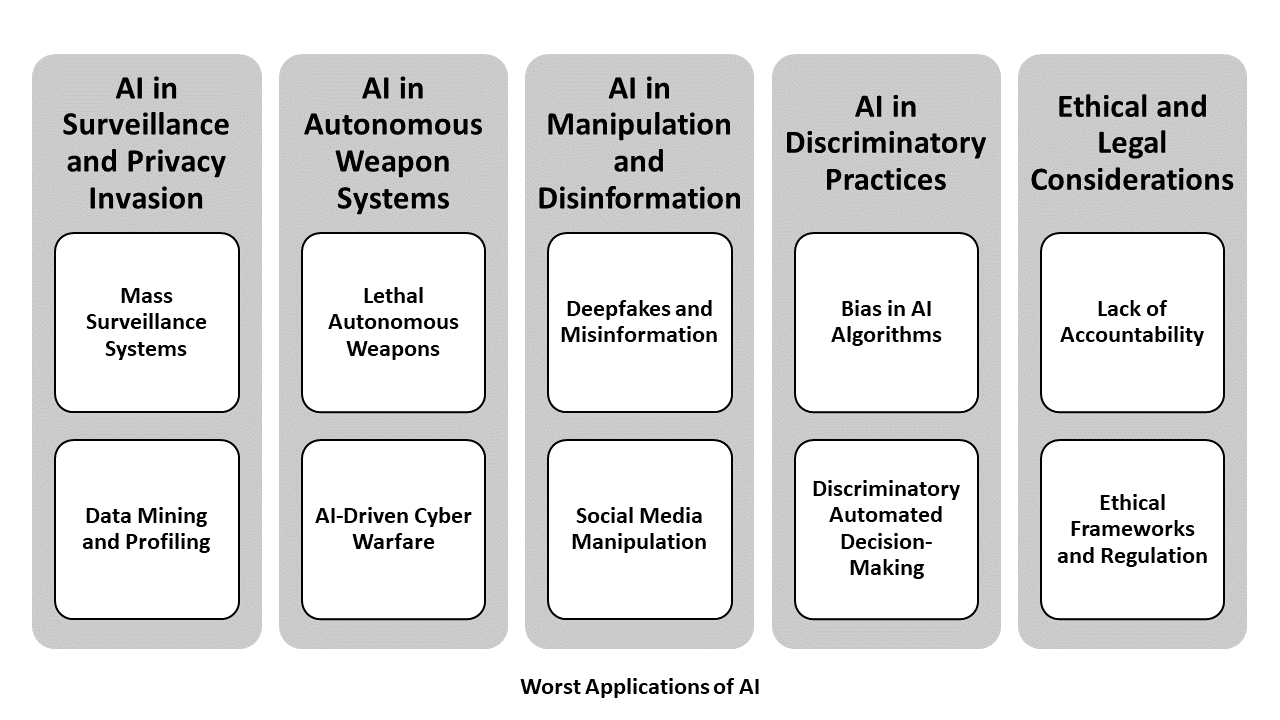

Artificial Intelligence (AI) has brought significant benefits across many industries, improving efficiency and accuracy and driving innovation.

However, not all applications of AI have been positive. Some raise significant ethical, social, and legal concerns. We will explore some of the worst applications of AI, examining the potential harms and negative impacts associated with these technologies. By understanding these issues, we can better navigate the ethical landscape of AI and work towards mitigating its adverse effects.

Mass Surveillance Systems

One of the most controversial uses of AI is in mass surveillance systems. Governments and private organizations have increasingly adopted AI-powered facial recognition and tracking technologies for monitoring large populations. While these systems can enhance security and aid law enforcement, they also pose serious privacy concerns. The capability to track individuals' movements and behaviors without their consent infringes on privacy rights and can lead to authoritarian abuses.

In some cases, AI surveillance systems have been used to suppress dissent, monitor political opponents, and discriminate against certain groups. The lack of transparency and accountability in the deployment of these technologies exacerbates these issues, as individuals often have little ability to understand, let alone challenge, the data being collected about them.

Data Mining and Profiling

AI's ability to process and analyze vast amounts of data has led to widespread data mining and profiling practices. Companies and organizations use AI algorithms to collect and analyze personal data, often without explicit consent. This data is then used to create detailed profiles of individuals, which can be exploited for targeted advertising, influencing consumer behavior, and even manipulating public opinion.

The misuse of personal data through AI-driven profiling not only raises privacy concerns but also risks reinforcing biases and stereotypes. For example, profiling based on browsing history or social media activity can lead to discrimination in areas like hiring, lending, and access to services.

Lethal Autonomous Weapons

Lethal autonomous weapons, also known as "killer robots," represent one of the most alarming applications of AI. These systems are capable of selecting and engaging targets without human intervention. While they offer potential military advantages, such as reducing the risk to a military's own personnel and improving combat efficiency, they also pose significant ethical and legal challenges.

The primary concern with lethal autonomous weapons is the delegation of life-and-death decisions to machines. This raises questions about accountability and the potential for misuse or malfunction. Additionally, the deployment of such weapons could lead to an arms race, destabilizing international security and increasing the likelihood of conflicts.

AI-Driven Cyber Warfare

AI has also been employed in cyber warfare, where it is used to conduct sophisticated attacks on digital infrastructure. AI-driven tools can automate the identification and exploitation of vulnerabilities in computer systems, making cyber-attacks more efficient and difficult to defend against. These attacks can target critical infrastructure, financial systems, and even electoral processes, posing significant risks to national security and democratic institutions.

The use of AI in cyber warfare blurs the line between state and non-state actors, as advanced hacking tools can be developed and deployed by a wide range of entities. This complicates attribution and accountability, making it challenging to respond to and mitigate these threats.

Deepfakes and Misinformation

Deepfakes, AI-generated or AI-manipulated videos, images, or audio that look realistic but depict things that never happened, are a growing source of misinformation. They can be used to spread false information, manipulate public opinion, and damage reputations. For example, deepfake videos can depict public figures saying or doing things they never did, potentially influencing elections or causing public unrest.

The ability of AI to create convincing falsehoods challenges the very notion of truth and authenticity in digital media. As deepfake technology becomes more accessible and sophisticated, the potential for misuse increases, threatening to undermine trust in media and institutions.

Social Media Manipulation

AI algorithms are extensively used on social media platforms to curate content and target advertisements. While these algorithms can enhance user experience, they can also be exploited to manipulate public opinion. For instance, AI-driven bots can amplify certain viewpoints, spread misinformation, and create echo chambers that reinforce biases.

The use of AI in social media manipulation has been implicated in numerous political and social controversies, from attempts to influence election outcomes to the deepening of social divisions. The ability to micro-target individuals with tailored content based on their data raises ethical concerns about consent and the manipulation of democratic processes.

Bias in AI Algorithms

AI algorithms are only as good as the data they are trained on. When this data reflects societal biases, the resulting AI systems can perpetuate and even exacerbate discrimination. For example, AI tools used in hiring, lending, and law enforcement have been found to exhibit racial, gender, and socioeconomic biases.

In hiring, AI systems may favor candidates from certain backgrounds or with specific keywords in their resumes, leading to discrimination against minorities or women. In law enforcement, predictive policing algorithms can disproportionately target minority communities, reinforcing existing inequalities in the criminal justice system.
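To make "disproportionately" concrete: one widely used screening check in U.S. hiring is the "four-fifths rule" from the EEOC's Uniform Guidelines, which flags a group whose selection rate falls below 80% of the highest group's rate as showing possible adverse impact. The minimal sketch below computes that ratio. The function names and the sample outcomes are hypothetical, invented purely for illustration, and a low ratio is a signal for further review rather than proof of discrimination.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the fraction of candidates selected in each group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratios(decisions):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selected candidates")
    return {group: rate / highest for group, rate in rates.items()}


# Hypothetical screening outcomes, made up for illustration only:
# group_a: 50 of 100 selected; group_b: 30 of 100 selected.
decisions = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

ratios = disparate_impact_ratios(decisions)
# Groups below the 0.8 threshold of the four-fifths rule are flagged.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios)
print(flagged)
```

Comparing each group to the highest-rate group mirrors how the four-fifths rule is usually stated; other fairness measures, such as comparing error rates across groups instead of selection rates, can reach different conclusions on the same data.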

Discriminatory Automated Decision-Making

The use of AI in automated decision-making processes extends beyond hiring and law enforcement. It can affect access to healthcare, education, and social services. For instance, AI systems used in healthcare may prioritize treatment for certain groups over others based on biased data, while in education, automated grading systems may disadvantage students from specific demographics.

These discriminatory practices not only harm individuals but also contribute to systemic inequalities. Addressing bias in AI systems requires a commitment to transparency, fairness, and the inclusion of diverse perspectives in the development process.

Lack of Accountability

One of the biggest challenges in addressing the negative impacts of AI is the lack of accountability. When an AI system causes harm, it is often unclear who is responsible: the developers, the users, or the system itself. This ambiguity complicates efforts to regulate and govern AI technologies effectively.

The concept of "algorithmic transparency" has been proposed as a solution, advocating for greater openness in how AI systems operate and make decisions. However, achieving this transparency is challenging, especially with complex machine learning models that are not easily interpretable.

Ethical Frameworks and Regulation

Establishing ethical frameworks and regulatory standards for AI is critical to mitigating its worst applications. This includes developing guidelines for the ethical use of AI, protecting privacy rights, and ensuring that AI systems are fair and non-discriminatory. However, creating and enforcing these regulations is a complex task that requires international cooperation and the involvement of diverse stakeholders.

The rapid pace of AI development often outstrips the ability of policymakers to keep up, leading to gaps in regulation and oversight. To address this, continuous engagement between technologists, ethicists, and regulators is necessary to adapt to the evolving landscape of AI technologies.

While AI has the potential to transform many aspects of society for the better, its worst applications highlight significant ethical, social, and legal challenges. From surveillance and privacy invasion to autonomous weapons and discriminatory practices, the misuse of AI technologies can lead to profound negative consequences. It is crucial to address these issues proactively, ensuring that AI development is guided by ethical principles and robust regulatory frameworks.

As we continue to integrate AI into our daily lives, it is essential to foster a culture of responsible innovation. This includes promoting transparency, accountability, and fairness in AI systems and protecting individuals' rights and well-being. By doing so, we can mitigate the risks associated with the worst applications of AI and harness its potential for the greater good.

Ahmed Banafa is an expert in new tech with appearances on ABC, NBC, CBS, FOX TV and radio stations. He served as a professor, academic advisor and coordinator at well-known American universities and colleges. His research has been featured in Forbes, MIT Technology Review, ComputerWorld and Techonomy. He has published over 100 articles about the Internet of Things, blockchain, artificial intelligence, cloud computing and big data. His research papers are used in many patents, numerous theses and conferences. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including the Distinguished Tenured Staff Award, Instructor of the Year and a Certificate of Honor from the City and County of San Francisco. Ahmed studied cyber security at Harvard University. He is the author of the book Secure and Smart Internet of Things Using Blockchain and AI.
