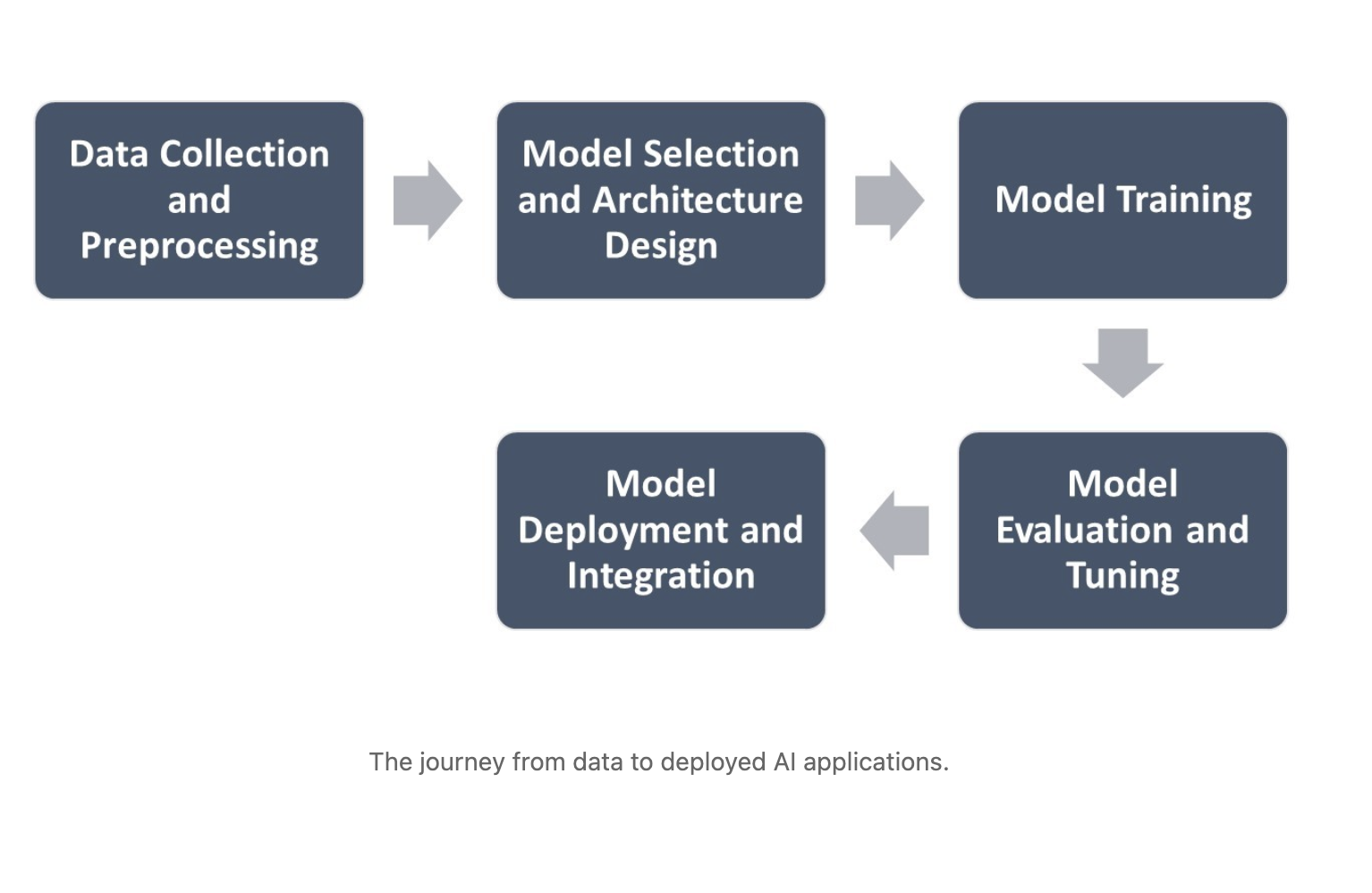

The ability to transform raw data into intelligent and actionable AI models is a crucial skill.

This multi-step process involves a combination of hardware resources, software tools, and mathematical techniques. Let's explore the journey from data to deployed AI applications.

Step 1: Data Collection and Preprocessing

The first step in creating an AI model is to gather and prepare the data that will be used for training. This involves:

a. Data Acquisition: Obtaining relevant data from various sources, such as databases, APIs, sensors, or manual data collection processes.

b. Data Cleaning: Handling missing values, removing duplicates, and addressing inconsistencies or errors in the data.

c. Data Transformation: Converting the data into a suitable format for model training, such as numerical encoding for categorical variables or normalization/scaling for numerical features.

d. Data Splitting: Dividing the dataset into training, validation, and testing subsets for model training, evaluation, and testing purposes.

Hardware: High-capacity storage systems (e.g., cloud storage, network-attached storage) and computing resources for data processing.

Software: Data wrangling tools (e.g., Python libraries like pandas and NumPy), database management systems, data cleaning scripts.
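The cleaning, transformation, and splitting steps above can be sketched in a few lines. This is a dependency-free illustration rather than a production pipeline: the records, the field names (`age`, `city`, `label`), and the 60/20/20 split ratios are all assumptions made for the example, and in practice a library such as pandas would usually do this work.

```python
import random

# Hypothetical raw records: one exact duplicate and one missing value.
raw_rows = [
    {"age": 34, "city": "Paris", "label": 1},
    {"age": None, "city": "Lyon", "label": 0},
    {"age": 51, "city": "Paris", "label": 1},
    {"age": 34, "city": "Paris", "label": 1},  # duplicate of the first row
    {"age": 27, "city": "Nice", "label": 0},
    {"age": 45, "city": "Lyon", "label": 1},
]

def clean(rows):
    """Drop exact duplicates, then fill missing ages with the mean age."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items(), key=lambda kv: kv[0]))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))
    known = [r["age"] for r in unique if r["age"] is not None]
    mean_age = sum(known) / len(known)
    for r in unique:
        if r["age"] is None:
            r["age"] = mean_age
    return unique

def transform(rows):
    """One-hot encode `city` and min-max scale `age` to [0, 1]."""
    cities = sorted({r["city"] for r in rows})
    ages = [r["age"] for r in rows]
    lo, hi = min(ages), max(ages)
    features = []
    for r in rows:
        scaled_age = (r["age"] - lo) / (hi - lo)
        one_hot = [1.0 if r["city"] == c else 0.0 for c in cities]
        features.append([scaled_age] + one_hot)
    labels = [r["label"] for r in rows]
    return features, labels

def split(features, labels, train=0.6, val=0.2, seed=0):
    """Shuffle indices once, then cut into train/validation/test subsets."""
    idx = list(range(len(features)))
    random.Random(seed).shuffle(idx)
    n_train = int(len(idx) * train)
    n_val = int(len(idx) * val)
    parts = (idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:])
    return [([features[i] for i in p], [labels[i] for i in p]) for p in parts]

rows = clean(raw_rows)
X, y = transform(rows)
train_set, val_set, test_set = split(X, y)
```

For brevity the scaling statistics here are computed over all rows. In a real project they would normally be computed on the training subset only and then applied to the validation and test subsets, so that no information leaks from held-out data.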

Step 2: Model Selection and Architecture Design

Next, you need to choose an appropriate model architecture based on the problem domain and the characteristics of the data. Some common model types include:

· Feedforward Neural Networks

· Convolutional Neural Networks (CNNs) for image data

· Recurrent Neural Networks (RNNs) or Transformers for sequence data

· Generative Adversarial Networks (GANs) for generative modeling

The model architecture involves determining the number of layers, types of layers (e.g., convolutional, pooling, dense), activation functions, and other hyperparameters.

Hardware: Powerful CPU/GPU systems for training and experimenting with different model architectures.

Software: Deep learning frameworks (e.g., TensorFlow, PyTorch, Keras), model visualization tools.
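To make the idea of "number of layers, types of layers, and activation functions" concrete, here is a minimal sketch of a feedforward network's forward pass in plain Python. The layer sizes `[4, 8, 8, 1]`, the weight initialization, and the choice of ReLU and sigmoid activations are illustrative assumptions; a framework such as TensorFlow or PyTorch would normally define and run the model.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for a dense layer with small random weights."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(x, layer):
    """Compute W x + b for one dense layer."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]

def relu(v):
    return [max(0.0, z) for z in v]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-z)) for z in v]

# Architecture choice (assumed): 4 inputs, two hidden layers of 8 units, 1 output.
layer_sizes = [4, 8, 8, 1]
rng = random.Random(0)
layers = [init_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]

def forward(x):
    """ReLU on hidden layers, sigmoid on the output layer."""
    for layer in layers[:-1]:
        x = relu(dense(x, layer))
    return sigmoid(dense(x, layers[-1]))

# Each dense layer has n_in * n_out weights plus n_out biases.
n_params = sum(a * b + b for a, b in zip(layer_sizes, layer_sizes[1:]))
output = forward([0.5, -1.0, 0.25, 2.0])
```

Changing `layer_sizes` is the simplest way to see how architecture decisions affect the parameter count, which in turn drives memory and compute requirements.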

Step 3: Model Training

Once the model architecture is defined, the next step is to train the model using the prepared training data. This typically involves:

a. Defining the Loss Function: Selecting an appropriate loss function (e.g., mean squared error for regression, cross-entropy for classification) to measure the model's performance during training.

b. Optimization Algorithm: Choosing an optimization algorithm (e.g., Stochastic Gradient Descent, Adam) to update the model's parameters and minimize the loss function.

c. Training Loop: Implementing a training loop that iteratively feeds the training data to the model, computes the loss, and updates the model's parameters using the optimization algorithm.

d. Regularization: Applying techniques like dropout, L1/L2 regularization, or early stopping to prevent overfitting and improve model generalization.

e. Validation: Periodically evaluating the model's performance on the validation set to monitor its progress and tune hyperparameters if necessary.

Hardware: High-performance computing clusters or cloud instances with multiple GPUs for parallel training.

Software: Deep learning frameworks, higher-level training libraries (e.g., PyTorch Lightning), data parallelization tools.
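The training steps listed above (loss function, optimizer, training loop, regularization, validation) fit together as in the following sketch. It trains a one-variable linear model with stochastic gradient descent, an L2 penalty, and early stopping. The synthetic data, learning rate, penalty strength, and patience value are assumptions chosen only to keep the example small.

```python
import random

rng = random.Random(0)
# Synthetic data for illustration only: y = 3x + 1 plus small Gaussian noise.
data = [(x, 3.0 * x + 1.0 + rng.gauss(0, 0.1)) for x in [i / 50 for i in range(100)]]
rng.shuffle(data)
train, val = data[:80], data[80:]

def mse(w, b, rows):
    """Mean squared error of the linear model w * x + b on `rows`."""
    return sum((w * x + b - y) ** 2 for x, y in rows) / len(rows)

w, b = 0.0, 0.0
lr, l2, patience = 0.05, 1e-4, 5          # assumed hyperparameters
best_val, best_params, bad_epochs = float("inf"), (w, b), 0

for epoch in range(200):
    rng.shuffle(train)
    for x, y in train:                    # one SGD update per example
        err = w * x + b - y
        w -= lr * (2 * err * x + 2 * l2 * w)   # gradient of err^2 + l2 * w^2
        b -= lr * (2 * err)
    val_loss = mse(w, b, val)             # validation after every epoch
    if val_loss < best_val - 1e-6:
        best_val, best_params, bad_epochs = val_loss, (w, b), 0
    else:
        bad_epochs += 1
        if bad_epochs >= patience:        # early stopping
            break

w, b = best_params                        # keep the best validated parameters
```

The same structure carries over to deep learning frameworks, where the gradient computation and parameter update are handled by automatic differentiation and an optimizer object.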

Mathematical Concepts:

- Loss Functions (e.g., mean squared error, cross-entropy)

- Optimization Algorithms (e.g., Stochastic Gradient Descent, Adam)

- Regularization Techniques (e.g., dropout, L1/L2 regularization)
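For reference, the concepts listed above can be written compactly as follows. The notation is an assumption introduced here, not taken from the article: $N$ examples, $K$ classes, targets $y$, predictions $\hat{y}$ or predicted probabilities $\hat{p}$, parameters $\theta$, learning rate $\eta$, and L2 penalty strength $\lambda$.

```latex
\begin{aligned}
\mathcal{L}_{\text{MSE}}(\theta) &= \frac{1}{N}\sum_{i=1}^{N}\bigl(y_i - \hat{y}_i\bigr)^2 \\
\mathcal{L}_{\text{CE}}(\theta) &= -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} y_{ik}\,\log \hat{p}_{ik} \\
\mathcal{L}_{\text{reg}}(\theta) &= \mathcal{L}(\theta) + \lambda\,\lVert\theta\rVert_2^2 \\
\theta_{t+1} &= \theta_t - \eta\,\nabla_\theta \mathcal{L}_{\text{reg}}(\theta_t)
\end{aligned}
```

In stochastic gradient descent the gradient in the last line is estimated on a single example or a mini-batch rather than on the full dataset; Adam follows the same pattern but rescales each step using running averages of the gradient and its square.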

Step 4: Model Evaluation and Tuning

After training, the model's performance is evaluated on the held-out test set to assess its generalization capability. This step involves:

a. Performance Metrics: Selecting appropriate evaluation metrics (e.g., accuracy, precision, recall, F1-score, mean squared error) based on the problem domain.

b. Evaluation on Test Set: Computing the chosen performance metrics on the test set to obtain an unbiased estimate of the model's performance.

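c. Hyperparameter Tuning: If the model's performance is unsatisfactory, adjusting the hyperparameters (e.g., learning rate, batch size, number of layers) and retraining the model to improve its performance. Tuning decisions should be guided by validation-set results; repeatedly tuning against the test set makes the test estimate optimistically biased.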

d. Model Comparison: Comparing the performance of different model architectures or techniques to select the best-performing model for the given problem.

Hardware: Dedicated testing/validation systems or cloud instances.

Software: Evaluation libraries (e.g., scikit-learn, TensorFlow Model Analysis), visualization tools (e.g., TensorBoard), hyperparameter tuning frameworks (e.g., Keras Tuner, Ray Tune).

Mathematical Concepts:

- Performance Metrics (e.g., accuracy, precision, recall, F1-score)

- Statistical Techniques for Model Comparison and Selection
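As a small worked example of the metrics named above, the following sketch computes accuracy, precision, recall, and F1-score for a binary classifier from scratch. The label vectors are made up for illustration; libraries such as scikit-learn provide equivalent functions.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical test-set labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
```

With these eight examples there are 3 true positives, 1 false positive, 1 false negative, and 3 true negatives, so all four metrics come out to 0.75.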

Step 5: Model Deployment and Integration

Once a satisfactory model is obtained, it can be deployed and integrated into applications or production systems. This step may involve:

a. Model Serialization: Saving the trained model's architecture and weights to a file or database for later use or deployment.

b. Application Integration: Integrating the trained model into the target application or system, typically by exposing an API or embedding the model within the application's codebase.

c. Model Monitoring: Implementing monitoring mechanisms to track the model's performance in the deployed environment and detect any potential issues or performance degradation over time.

d. Model Updating: Establishing a process for retraining or updating the model with new data as it becomes available, ensuring the model remains up-to-date and accurate.
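Model serialization, the first item above, amounts to writing the architecture description and weights to storage and restoring them later. The sketch below uses JSON and a tiny linear model purely for illustration; the dictionary layout and the `save_model`, `load_model`, and `predict` helpers are hypothetical, and real frameworks ship their own formats and loaders.

```python
import json
import os
import tempfile

# A toy "trained model": architecture metadata plus learned parameters.
model = {
    "architecture": {"type": "linear", "n_features": 3},
    "weights": [0.4, -1.2, 0.7],
    "bias": 0.1,
}

def predict(m, x):
    """Apply the linear model stored in dictionary `m` to feature vector `x`."""
    return sum(w * xi for w, xi in zip(m["weights"], x)) + m["bias"]

def save_model(m, path):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(m, f)

def load_model(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)

# Round trip through a temporary file to confirm nothing is lost.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model.json")
    save_model(model, path)
    restored = load_model(path)

sample = [1.0, 2.0, 3.0]
same_prediction = predict(model, sample) == predict(restored, sample)
```

Checking that the restored model reproduces the original predictions is a cheap sanity test worth keeping in any deployment pipeline.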

Throughout these steps, various mathematical concepts and techniques may be employed, such as:

· Linear algebra operations for matrix computations and neural network calculations

· Probability theory and statistics for loss functions and evaluation metrics

· Calculus and optimization techniques for gradient-based optimization algorithms

· Algorithms for data preprocessing, feature engineering, and dimensionality reduction

Ethical Considerations:

- Data Privacy and Security

- Bias Mitigation and Fairness

- Model Interpretability and Transparency

- Responsible AI Practices

While the specific details and implementations may vary depending on the problem domain, data characteristics, and chosen model architecture, the general process outlined above serves as a framework for creating AI models from data to applications.

It's important to note that the process of creating an AI model is iterative, and multiple iterations of model selection, training, evaluation, and tuning may be required to achieve satisfactory performance. Additionally, careful consideration must be given to ethical and responsible AI practices, such as ensuring data privacy, mitigating biases, and maintaining transparency and interpretability of the models.

By leveraging the appropriate hardware resources, software tools, and mathematical foundations, data scientists and machine learning engineers can effectively transform data into powerful AI models that can be integrated into a wide range of applications, driving innovation and solving complex problems across various domains.

Putting It All Together: An End-to-End Example

To illustrate the end-to-end process, let's consider a real-world example: building an AI model for image classification to automatically identify different types of vehicles.

Step 1: Data Collection and Preprocessing

- Acquire a dataset of labeled vehicle images from various sources (e.g., online repositories, crowdsourcing)

- Use data cleaning scripts (e.g., built on OpenCV's Python bindings) to remove corrupted or low-quality images

- Preprocess images (e.g., resizing, normalization) using an input pipeline such as TensorFlow's tf.data API
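The cleaning and preprocessing bullets above might look like this in miniature. The sketch checks file signatures to reject obviously invalid files, resizes with nearest-neighbor sampling, and scales pixel values to [0, 1]. The function names and the tiny 4x4 grayscale "image" are assumptions for illustration; a signature check does not catch truncated or low-quality images, and real projects would rely on an image library for decoding and resizing.

```python
JPEG_MAGIC = b"\xff\xd8\xff"           # first bytes of a JPEG file
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"       # first bytes of a PNG file

def looks_like_image(data: bytes) -> bool:
    """Cheap header check: does the file start with a JPEG or PNG signature?"""
    return data.startswith(JPEG_MAGIC) or data.startswith(PNG_MAGIC)

def resize_nearest(pixels, new_h, new_w):
    """Nearest-neighbor resize of a 2D grid of pixel values."""
    old_h, old_w = len(pixels), len(pixels[0])
    return [
        [pixels[r * old_h // new_h][c * old_w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]

def normalize(pixels):
    """Scale 8-bit intensities from [0, 255] to [0.0, 1.0]."""
    return [[value / 255.0 for value in row] for row in pixels]

# Hypothetical 4x4 grayscale image with 8-bit intensities.
image = [
    [0, 64, 128, 255],
    [10, 20, 30, 40],
    [255, 128, 64, 0],
    [5, 6, 7, 8],
]
resized = resize_nearest(image, 2, 2)
prepared = normalize(resized)
```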

Step 2: Model Selection and Architecture Design

- Choose a Convolutional Neural Network (CNN) architecture (e.g., ResNet, VGGNet) suitable for image classification tasks

- Design the model architecture, specifying the number of convolutional and dense layers, filter sizes, and activation functions

- Visualize and experiment with different architectures using tools like TensorBoard or Keras' model visualization utilities
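To show what a convolutional layer actually computes, here is a minimal sketch of a single 2D convolution (strictly, cross-correlation, as deep learning frameworks implement it) with no padding and stride 1, followed by ReLU. The 4x5 image and the vertical-edge kernel are made-up values; architectures such as ResNet stack many such filters with learned weights.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu2d(feature_map):
    """Element-wise ReLU activation."""
    return [[max(0, v) for v in row] for row in feature_map]

# Toy image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Hand-written vertical edge detector (a trained CNN learns such filters).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = relu2d(conv2d_valid(image, kernel))
```

The output is 2x3 because a 3x3 filter on a 4x5 input without padding yields (4 - 3 + 1) x (5 - 3 + 1) positions; the filter responds only where the window spans the dark-to-bright edge.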

Step 3: Model Training

- Split the preprocessed dataset into training, validation, and test sets

- Define an appropriate loss function (e.g., categorical cross-entropy) and optimization algorithm (e.g., Adam)

- Train the model on the training set using a GPU-accelerated deep learning framework like TensorFlow or PyTorch

- Apply regularization techniques (e.g., dropout, data augmentation) to improve generalization

- Monitor training progress and periodically evaluate on the validation set
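The categorical cross-entropy loss mentioned above is usually paired with a softmax output layer. The following sketch computes both for a single example; the class names and logit values are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(probs, true_index):
    """Negative log-probability assigned to the correct class."""
    return -math.log(probs[true_index])

classes = ["car", "truck", "motorcycle"]   # hypothetical vehicle classes
logits = [2.0, 1.0, 0.1]                   # hypothetical model outputs
probs = softmax(logits)
loss = categorical_cross_entropy(probs, classes.index("car"))
```

The loss shrinks toward zero as the probability assigned to the correct class approaches 1, which is what the optimizer pushes toward during training.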

Step 4: Model Evaluation and Tuning

- Evaluate the trained model's performance on the held-out test set using metrics like accuracy, precision, recall, and F1-score

- Utilize visualization tools (e.g., confusion matrices, saliency maps) to analyze the model's predictions and identify potential issues

- Experiment with different hyperparameters (e.g., learning rate, batch size) and model architectures using tuning frameworks like Keras Tuner or Ray Tune

- Select the best-performing model based on the evaluation results
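A confusion matrix, mentioned above as an analysis tool, is straightforward to build by hand. In this sketch the class names and predictions are invented for illustration; rows correspond to true classes and columns to predicted classes.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Return a matrix where entry [i][j] counts true class i predicted as j."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

labels = ["car", "truck", "bus"]
y_true = ["car", "car", "truck", "bus", "truck", "bus", "car"]
y_pred = ["car", "truck", "truck", "bus", "truck", "car", "car"]

matrix = confusion_matrix(y_true, y_pred, labels)

# Per-class recall: correct predictions on the diagonal over the row total.
recall = {
    label: matrix[i][i] / sum(matrix[i])
    for i, label in enumerate(labels)
}
```

Off-diagonal entries point to specific confusions (here, one bus predicted as a car), which often suggests where more training data or augmentation would help.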

Step 5: Model Deployment and Integration

- Serialize the trained model in a portable format such as TensorFlow SavedModel or ONNX, and serve it with a tool such as TensorFlow Serving

- Deploy the model to a cloud platform (e.g., AWS SageMaker, Google Cloud Vertex AI) or edge device, packaging it with containerization tools like Docker

- Integrate the deployed model into the target application or system (e.g., a vehicle recognition app) by exposing an API or embedding the model

- Implement monitoring and logging mechanisms to track the model's performance in production and detect potential issues or performance degradation over time
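One simple way to detect performance degradation in production is to track accuracy over a sliding window of recent predictions for which the true label later becomes known. The class below is a hypothetical sketch; the window size and alert threshold are assumptions that would be tuned per application.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)   # old entries drop off automatically
        self.threshold = threshold

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        """Flag degradation only once the window is full, to avoid noisy alerts."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold

# Hypothetical stream of (prediction, ground truth) pairs from production.
monitor = AccuracyMonitor(window=5, threshold=0.8)
stream = [("car", "car"), ("bus", "bus"), ("car", "truck"),
          ("truck", "truck"), ("bus", "car")]
for predicted, actual in stream:
    monitor.record(predicted, actual)
```

Here three of the last five predictions are correct, so the windowed accuracy is 0.6 and `monitor.degraded()` returns `True`, which could trigger an alert or a retraining job.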

By following this end-to-end workflow and leveraging the appropriate hardware, software, and mathematical foundations, data scientists and machine learning engineers can create powerful AI models that can be successfully integrated into real-world applications, driving innovation and solving complex problems in domains like transportation, manufacturing, and beyond.

Ahmed Banafa is an expert in new tech with appearances on ABC, NBC, CBS, FOX TV and radio stations. He served as a professor, academic advisor and coordinator at well-known American universities and colleges. His research has been featured in Forbes, MIT Technology Review, ComputerWorld and Techonomy. He has published over 100 articles about the internet of things, blockchain, artificial intelligence, cloud computing and big data. His research papers are used in many patents, numerous theses and conferences. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including the Distinguished Tenured Staff Award, Instructor of the Year and a Certificate of Honor from the City and County of San Francisco. Ahmed studied cybersecurity at Harvard University. He is the author of the book Secure and Smart Internet of Things Using Blockchain and AI.
