Artificial Intelligence (AI) systems have made remarkable strides.

In recent years, AI systems have achieved human-level or even superhuman performance in specific tasks such as image recognition, natural language processing, and game-playing.

Despite these impressive achievements, current AI systems still struggle with two fundamental aspects of human intelligence: the ability to reason about causality and the ability to handle uncertainty.

Causal Reasoning:

Causal reasoning is the process of understanding and inferring the underlying causal relationships between events or variables in a system. It involves identifying the root causes of observed phenomena, predicting the effects of interventions or actions, and reasoning counterfactually about alternative scenarios.

Most contemporary AI systems, particularly those based on deep learning and neural networks, excel at pattern recognition and correlational reasoning. They can identify statistical associations and patterns in data, but they often lack the ability to reason about the causal mechanisms that generate those patterns.

This limitation poses significant challenges in domains where causal understanding is crucial, such as scientific discovery, decision-making under uncertainty, and policy evaluation. For example, in healthcare, identifying mere correlations between symptoms and diseases is insufficient; understanding the underlying causal mechanisms is essential for effective diagnosis, treatment selection, and developing targeted interventions.
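The gap between association and causation can be illustrated with a small simulation. The sketch below assumes a hypothetical system in which a hidden variable Z drives both X and Y while X has no effect on Y; the variable names, noise levels, and sample size are illustrative choices, not taken from any real dataset.

```python
import random


def pearson(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate(n, intervene, seed=0):
    """Hypothetical system: Z causes both X and Y; X has no effect on Y."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)                # hidden common cause
        if intervene:
            x = rng.gauss(0, 1)            # do(X): X is set independently of Z
        else:
            x = z + rng.gauss(0, 0.5)      # observational regime: X depends on Z
        y = z + rng.gauss(0, 0.5)          # Y depends on Z only
        xs.append(x)
        ys.append(y)
    return pearson(xs, ys)


observational_corr = simulate(20000, intervene=False)
interventional_corr = simulate(20000, intervene=True)
print(f"correlation when observing X:   {observational_corr:.2f}")
print(f"correlation when intervening X: {interventional_corr:.2f}")
```

Under these assumptions the observational correlation is strong, yet it vanishes once X is set by intervention. A purely correlational model trained on the observational data would have no way to anticipate that.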

Reasoning under Uncertainty:

Real-world problems are often characterized by uncertainty, incomplete information, and noisy or ambiguous data. The ability to reason effectively under such conditions is a hallmark of human intelligence, allowing us to make informed decisions, formulate hypotheses, and adapt to changing environments.

However, many AI systems struggle with reasoning under uncertainty, particularly when faced with novel situations or scenarios that deviate from their training data. This limitation is partly due to the inherent brittleness of traditional AI approaches, which often rely on deterministic rules or fixed models that fail to capture the complexity and variability of real-world environments.
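One way to see what a fixed point estimate leaves out is to compare it with a full posterior distribution. The sketch below assumes a Beta-Bernoulli model with a uniform prior; the function name `beta_posterior` and the observation counts are hypothetical and chosen only for illustration.

```python
def beta_posterior(successes, failures, prior_a=1.0, prior_b=1.0):
    """Posterior mean and standard deviation of a success rate under a Beta prior."""
    a = prior_a + successes
    b = prior_b + failures
    mean = a / (a + b)
    variance = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, variance ** 0.5


# Both data sets have the same raw success rate (75%) but very different sizes.
mean_small, sd_small = beta_posterior(successes=3, failures=1)
mean_large, sd_large = beta_posterior(successes=300, failures=100)

print(f"4 observations:   mean={mean_small:.3f}, sd={sd_small:.3f}")
print(f"400 observations: mean={mean_large:.3f}, sd={sd_large:.3f}")
```

A deterministic rule would treat both cases identically, whereas the posterior standard deviation makes explicit that the estimate from four observations deserves far less confidence.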

Probabilistic Reasoning:

To address the challenges of causal reasoning and reasoning under uncertainty, researchers have turned to probabilistic frameworks that explicitly model uncertainty. These include probabilistic graphical models, such as Bayesian networks, and causal inference methods.

Bayesian networks, for example, provide a principled way to represent uncertainty using probability theory and, when their edges are given a causal interpretation, to represent causal relationships as well. They allow prior knowledge, evidence, and observed data to be combined to update beliefs and make inferences about unobserved variables or the effects of interventions.
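The belief-updating step can be sketched with the smallest possible network, a single edge Disease -> Test. The prevalence, sensitivity, and false-positive rate below are hypothetical numbers, and the second update assumes the two test results are conditionally independent given the disease status.

```python
def posterior_disease(prior, sensitivity, false_positive_rate):
    """P(disease | positive test) by Bayes' rule for the network Disease -> Test."""
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / p_positive


prior = 0.01  # hypothetical prevalence before any evidence

# First positive test: the prior is updated to a posterior.
after_one_test = posterior_disease(prior, sensitivity=0.95, false_positive_rate=0.05)

# Second positive test: the previous posterior serves as the new prior.
after_two_tests = posterior_disease(after_one_test, sensitivity=0.95, false_positive_rate=0.05)

print(f"after one positive test:  {after_one_test:.3f}")   # about 0.161
print(f"after two positive tests: {after_two_tests:.3f}")  # about 0.785
```

Even a fairly accurate test leaves the posterior low after one positive result because the prior is small; the belief only becomes strong once further evidence accumulates.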

Causal Inference:

Causal inference is a field of study that focuses on deriving causal relationships from observational or experimental data. It involves techniques such as structural equation modeling, instrumental variables, and the potential outcomes framework (also known as the Rubin causal model).

By leveraging these methods, AI systems can move beyond mere correlational analysis and gain a deeper understanding of the causal mechanisms underlying observed phenomena. This is particularly valuable in domains such as epidemiology, economics, and policy evaluation, where causal knowledge is essential for informed decision-making and intervention design.
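To make one of these techniques concrete, here is a minimal sketch of an instrumental-variable estimate on simulated data. It assumes a hypothetical linear system in which an unobserved confounder U affects both X and Y, and an instrument Z affects Y only through X; the coefficients, noise levels, and the constant `TRUE_EFFECT` are illustrative assumptions.

```python
import random


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / n


rng = random.Random(1)
TRUE_EFFECT = 2.0  # hypothetical causal effect of X on Y

z, x, y = [], [], []
for _ in range(50000):
    zi = rng.gauss(0, 1)                 # instrument: influences X, not Y directly
    ui = rng.gauss(0, 1)                 # unobserved confounder of X and Y
    xi = zi + ui + rng.gauss(0, 0.5)
    yi = TRUE_EFFECT * xi + 3.0 * ui + rng.gauss(0, 1)
    z.append(zi)
    x.append(xi)
    y.append(yi)

naive_slope = cov(x, y) / cov(x, x)      # regression of Y on X, biased by U
iv_estimate = cov(z, y) / cov(z, x)      # ratio (Wald) instrumental-variable estimator

print(f"naive regression slope: {naive_slope:.2f}")
print(f"IV estimate:            {iv_estimate:.2f}")
```

In this setup the naive slope overstates the effect because it absorbs the confounder's influence, while the instrumental-variable ratio lands close to the assumed true value. The result depends entirely on the instrument being valid, which real data cannot guarantee.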

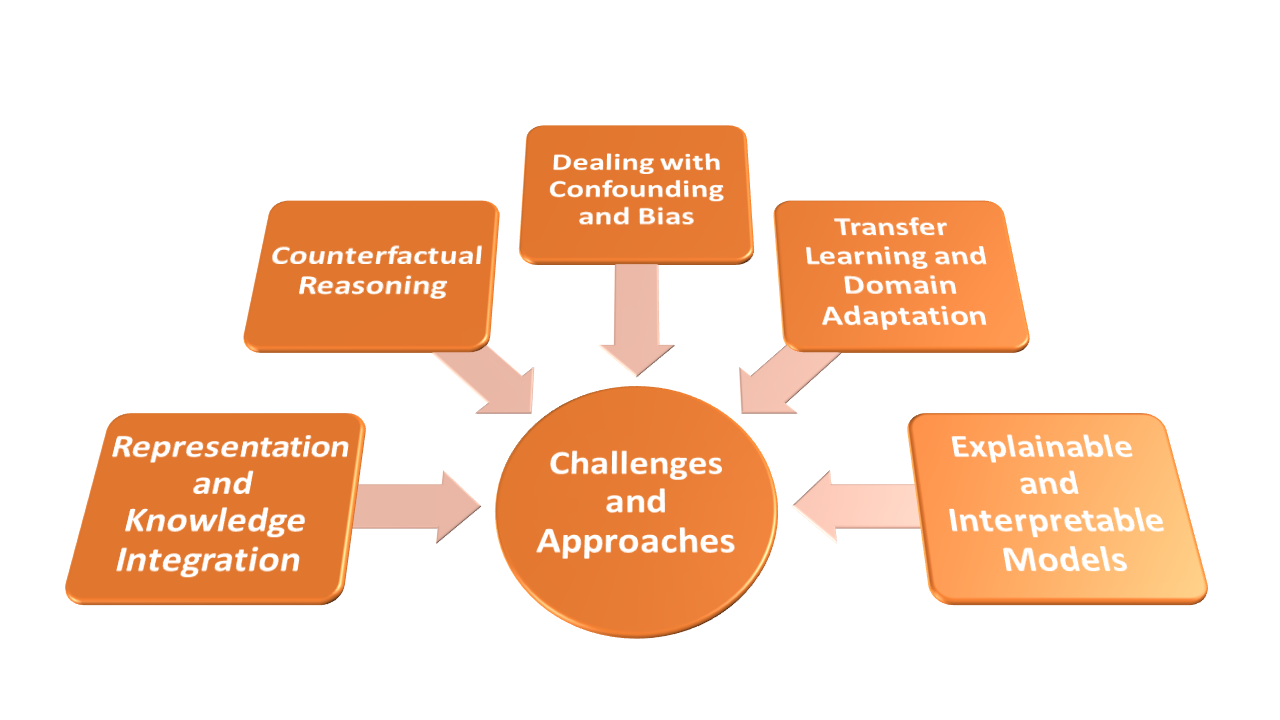

Challenges and Approaches:

Developing AI systems capable of robust causal reasoning and reasoning under uncertainty is a formidable challenge that requires addressing several key issues:

1. Representation and Knowledge Integration:

AI systems must be able to represent and integrate various forms of knowledge, including structured domain knowledge, empirical data, and causal relationships. This involves developing expressive and scalable representations that can capture the complexities of real-world systems and facilitate effective reasoning.

2. Counterfactual Reasoning:

The ability to reason counterfactually – considering alternative scenarios and assessing the potential outcomes of interventions or actions – is a critical component of causal reasoning. AI systems need to develop mechanisms for generating and evaluating counterfactual scenarios, drawing on causal models and domain knowledge.

3. Dealing with Confounding and Bias:

In observational data, confounding factors and biases can lead to spurious correlations or incorrect causal inferences. AI systems must incorporate techniques to identify and account for confounding variables, selection biases, and other sources of bias to ensure reliable causal conclusions.

4. Transfer Learning and Domain Adaptation:

AI systems should be able to leverage knowledge and causal models learned from one domain or context and adapt them to new scenarios or environments. This requires developing transfer learning and domain adaptation techniques that can effectively transfer causal knowledge while accounting for domain shifts and distribution changes.

5. Explainable and Interpretable Models:

As AI systems become more complex and are tasked with making critical decisions, it is essential that their reasoning processes and causal inferences are interpretable and explainable to humans. This not only promotes trust and accountability but also facilitates the validation and refinement of causal models.
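The confounding issue in item 3 can be sketched with stratified counts. The example below uses illustrative counts patterned on the well-known kidney-stone illustration of Simpson's paradox, and assumes that stone size is the only confounder, so the back-door adjustment formula P(Y | do(T)) = sum over z of P(Y | T, z) P(z) applies. The function names are hypothetical.

```python
# counts[(treatment, stratum)] = (recovered, total); illustrative numbers
counts = {
    ("A", "small"): (81, 87),
    ("A", "large"): (192, 263),
    ("B", "small"): (234, 270),
    ("B", "large"): (55, 80),
}
treatments = ("A", "B")
strata = ("small", "large")


def naive_rate(t):
    """Recovery rate for treatment t, pooling all strata (ignores confounding)."""
    recovered = sum(counts[(t, z)][0] for z in strata)
    total = sum(counts[(t, z)][1] for z in strata)
    return recovered / total


def adjusted_rate(t):
    """Back-door adjustment: weight stratum-specific rates by P(stratum)."""
    grand_total = sum(total for _, total in counts.values())
    rate = 0.0
    for z in strata:
        p_z = sum(counts[(tt, z)][1] for tt in treatments) / grand_total
        recovered, total = counts[(t, z)]
        rate += (recovered / total) * p_z
    return rate


naive_a, naive_b = naive_rate("A"), naive_rate("B")
adjusted_a, adjusted_b = adjusted_rate("A"), adjusted_rate("B")
print(f"naive:    A={naive_a:.3f}  B={naive_b:.3f}")
print(f"adjusted: A={adjusted_a:.3f}  B={adjusted_b:.3f}")
```

The pooled comparison favors B, while the adjusted comparison favors A, because the harder cases were assigned to A more often. Which answer is correct depends on the causal assumption stated above, not on the data alone.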

Researchers have proposed various approaches to tackle these challenges, including:

- Causal Discovery Algorithms: These algorithms aim to automatically learn causal models from observational data, using constraint-based methods (e.g., the PC algorithm), score-based methods (e.g., Greedy Equivalence Search), and hybrid approaches.

- Structural Causal Models (SCMs): SCMs provide a formal framework for representing and reasoning about causal relationships, leveraging concepts from graphical models, structural equation modeling, and causal inference. Advances in SCM learning and inference algorithms have enabled their application in various domains.

- Counterfactual Reasoning Frameworks: The potential outcomes framework (the Rubin causal model) and structural equation models that support counterfactual queries enable AI systems to reason about alternative scenarios and interventions.

- Uncertainty-Aware Learning: Techniques such as Bayesian deep learning, probabilistic programming, and uncertainty estimation methods aim to incorporate uncertainty into the learning and prediction processes of AI models, improving their ability to reason under uncertainty and quantify their confidence in predictions.
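As a rough illustration of how a structural causal model supports counterfactual queries, the sketch below uses a toy linear SCM with the equations X := U_x and Y := beta * X + U_y. The class name `LinearSCM`, the coefficient, and the observed values are all hypothetical. The counterfactual is computed with the standard three steps of abduction, action, and prediction.

```python
from dataclasses import dataclass


@dataclass
class LinearSCM:
    """Toy SCM: X := U_x, Y := beta * X + U_y (beta is a hypothetical coefficient)."""
    beta: float

    def predict_y(self, x, u_y):
        """Structural equation for Y."""
        return self.beta * x + u_y

    def counterfactual_y(self, x_obs, y_obs, x_new):
        """What Y would have been for this unit had X been x_new."""
        u_y = y_obs - self.beta * x_obs      # abduction: recover the unit's noise term
        return self.predict_y(x_new, u_y)    # action do(X = x_new), then prediction


scm = LinearSCM(beta=2.0)
cf = scm.counterfactual_y(x_obs=1.0, y_obs=3.0, x_new=0.0)
print(f"counterfactual Y had X been 0: {cf}")  # 1.0
```

The key design point is that the noise term inferred from the observed unit is held fixed while X is changed, which is what distinguishes a counterfactual from a prediction about a new, unrelated unit.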

Applications and Real-World Impact:

The ability to perform effective causal reasoning and reasoning under uncertainty has numerous applications across various domains, with the potential to drive significant real-world impact:

1. Healthcare and Biomedical Sciences:

- Understanding causal mechanisms of diseases and biological processes

- Identifying risk factors and developing targeted interventions

- Personalized medicine and treatment optimization

- Causal discovery from electronic health records and observational data

2. Scientific Discovery and Experimental Design:

- Automating hypothesis generation and experiment design

- Identifying causal factors in complex natural phenomena

- Accelerating scientific breakthroughs through causal reasoning

3. Decision-Making and Policy Evaluation:

- Assessing the causal impact of interventions and policy decisions

- Counterfactual reasoning for decision support and risk assessment

- Robust decision-making under uncertainty in domains like finance, economics, and public policy

4. Robotics and Autonomous Systems:

- Causal reasoning for task planning and decision-making in uncertain environments

- Counterfactual reasoning for safe exploration and failure recovery

- Adapting to changing conditions and transferring causal knowledge across domains

5. Fairness and Bias Mitigation:

- Identifying and mitigating causal pathways leading to biased decisions

- Counterfactual reasoning for fairness evaluation and debiasing

6. Explainable AI and Human-AI Collaboration:

- Providing causal explanations for AI decisions and predictions

- Enabling human-AI collaboration through interpretable causal models

- Building trust and accountability in AI systems

Despite the significant challenges, advances in causal reasoning and reasoning under uncertainty have the potential to push the boundaries of AI beyond narrow, specialized tasks and towards more robust and generalizable intelligence.

However, it is crucial to address the ethical and societal implications of these technologies, such as ensuring fairness, privacy, and accountability, and mitigating potential negative impacts or unintended consequences.

Future Directions and Conclusion:

The pursuit of AI systems capable of causal reasoning and reasoning under uncertainty is a long-term endeavor that will require sustained research efforts and interdisciplinary collaborations. Here are some future directions and considerations:

1. Hybrid Approaches and Neuro-Symbolic Integration:

Combining the strengths of neural networks and symbolic reasoning approaches through neuro-symbolic integration may unlock new avenues for causal reasoning and uncertainty handling. Leveraging the pattern recognition capabilities of deep learning with the interpretability and compositionality of symbolic systems could lead to more robust and transparent AI models.

2. Multimodal Causal Reasoning:

Developing AI systems that can reason about causal relationships across multiple modalities, such as vision, language, and physical interactions, is an important challenge. This will enable AI to better understand and interact with the rich, multimodal environments encountered in the real world.

3. Scalable and Efficient Inference:

As causal models and reasoning frameworks become more complex, scalable and efficient inference algorithms will be crucial for practical applications. This may involve leveraging advances in parallel computing, approximate inference techniques, and specialized hardware accelerators.

4. Causal Representation Learning:

Exploring methods for learning expressive and disentangled representations of causal variables and mechanisms directly from data could pave the way for more data-efficient and transferable causal reasoning capabilities.

5. Human-AI Collaboration and Interactive Learning:

Combining human expertise and domain knowledge with AI-driven causal discovery and reasoning could lead to more robust and interpretable models. Interactive learning approaches that facilitate human-AI collaboration and knowledge exchange may be key to advancing causal reasoning capabilities.

6. Causal Benchmarks and Evaluation Frameworks:

Developing comprehensive benchmarks and evaluation frameworks for causal reasoning and reasoning under uncertainty is essential for measuring progress, comparing different approaches, and identifying remaining challenges.

While significant challenges lie ahead, the pursuit of AI systems capable of robust causal reasoning and reasoning under uncertainty holds the promise of unlocking a new era of intelligent systems that can truly understand and interact with the complexities of the real world. By addressing these fundamental aspects of intelligence, AI has the potential to drive scientific discovery, enable more informed decision-making, and foster deeper human-AI collaboration across various domains.

Ahmed Banafa is an expert in new tech with appearances on ABC, NBC, CBS, FOX TV and radio stations. He has served as a professor, academic advisor and coordinator at well-known American universities and colleges. His research has been featured in Forbes, MIT Technology Review, ComputerWorld and Techonomy. He has published over 100 articles about the internet of things, blockchain, artificial intelligence, cloud computing and big data. His research papers are used in many patents, numerous theses and conferences. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including the Distinguished Tenured Staff Award, Instructor of the Year and a Certificate of Honor from the City and County of San Francisco. Ahmed studied cyber security at Harvard University. He is the author of the book Secure and Smart Internet of Things Using Blockchain and AI.
