
The pandemic turned our world upside down.

Many countries, major technology companies and start-up businesses face a global challenge of accelerating their digital transformation and harnessing the power of artificial intelligence (AI) to overcome the pandemic.

At its conception, AI researchers attempted to teach, instruct, or program machines by explicitly coding symbolic knowledge about the world into them. Today’s AI systems inductively “learn” from selected training data, as if from experience, observation, and trial and error, seemingly acquiring knowledge on their own; this is known as machine learning.

Standard machine and deep learning algorithms extract data patterns as statistical correlations from raw data, structured or unstructured. This is true for simple algorithms, like logistic regression, or sophisticated algorithms, like neural networks, which can learn deeper patterns from input data.

But there is a big BUT, a fatal flaw in the whole enterprise. Today's AI is largely machine learning, and its techniques, deep learning algorithms, and deep neural networks can’t identify causality: its elements and structures, processes and mechanisms, rules and relationships, data and models, everything that makes up our world.

This leads to all sorts of decision and prediction errors, data and algorithmic biases, lack of quality data, implementation failures, and ultimately the absence of real machine intelligence and learning.

Causal Machine Intelligence and Learning, which integrates symbolic AI with the statistical AI of machine learning, makes up the next generation of powerful intelligent machines running master [causal] learning algorithms. It allows intelligent machines to think about the world, factual and counterfactual, computing its alternatives and scenarios, and to interact effectively with any complex environment, physical or virtual.

According to Forbes, nine out of every ten machine learning projects in industry never go beyond the experimental phase into production. "It turns out there’s a fatal flaw in most companies’ approach to machine learning, the analytical tool of the future: 87% of projects do not get past the experiment phase and so never make it into production.

Why do so many companies, presumably on the basis of rational decisions, limit themselves simply to exploring the potential of machine learning, and even after undertaking large investments, hiring data scientists and investing resources, time and money, fail to take things to the next level?"

There are two big reasons, one educational and one fundamental. In any ML/DL/AI course, whether it’s Andrew Ng's extremely popular course, “Machine learning for average humans”, “Absolute beginning into machine learning”, etc., you’ll be told to learn to program and relearn statistics as though things were starting from scratch and there were not already a multitude of analytics tools.

The fundamental reason is causality and causation: causal relationships among causal variables, or complex causal interrelationships, instead of confounded, spurious correlations. Today's machine learning algorithms are incapable of identifying causal patterns, as they can only see correlations, which lack any grounding in reality or deeper sense.

There is an increasing number of works trying to show why Causal AI is the solution. Causal AI is now announced as a new category of machine intelligence that understands cause and effect. Leading ML/DL/AI researchers agree that causality is the future of AI. “Many of us think that we are missing the basic ingredients needed [for true machine intelligence], such as the ability to understand causal relationships in data”, says Yoshua Bengio, a leading figure in DL research.

Most of them make the same principal mistake: they apply only a linear, asymmetrical causality, in deterministic or statistical fashion. It commonly suggests that "the cause is greater than the effect". In reality, small events cause large effects due to the nonlinearity and cyclicity of causation, like the triggering of large amounts of potential energy, as in a nuclear bomb. Here is a standard, obsolete, monotonous line of argument about the causal fallacy of false cause, or non causa pro causa: "correlation does not imply causation" vs. "correlation implies causation".

“Correlation is not causation. Correlations are symmetrical (if x correlates with y then y correlates with x), they lack direction and they’re quantitative (the ‘Pearson correlation coefficient’, a standard measure of correlation, is a single number between -1 and 1). In contrast, causes are asymmetrical (if x causes y then y is not a cause of x), directional and qualitative. So correlation and causation are different concepts. Furthermore, causes can’t be reduced to correlations, or to any other statistical relationship. Causality requires a model of the environment. We know this largely thanks to Turing Award-winning research by AI pioneer Judea Pearl.” [causaLens blog]
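Both sides of this debate start from the symmetry of correlation and the bounded range of the Pearson coefficient. A few lines of Python can confirm both properties. This minimal sketch computes the coefficient from its definition (covariance divided by the product of the spreads) on a small invented sample.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    dx = [x - mx for x in xs]
    dy = [y - my for y in ys]
    cov = sum(a * b for a, b in zip(dx, dy))
    return cov / math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))

x = [1, 2, 3, 4, 5]          # invented sample
y = [2, 4, 5, 4, 5]
r_xy = pearson(x, y)
r_yx = pearson(y, x)
print(r_xy, r_yx)            # the same value (about 0.775) in both directions
```

Swapping the arguments changes nothing, and the value always stays within [-1, 1].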

In fact, real things are quite the opposite: “correlation implies or suggests causation”. Correlations are symmetrical (if x correlates with y, then y correlates with x), and so are causes (if x causes y, then y is a cause of x). So correlation and causation are similar concepts, and causes therefore tend intuitively to be reduced to correlations, or to other statistical relationships. Where there is causation, there is correlation. There is also a production or generation from cause to effect, or vice versa, a plausible mechanism, and sometimes common and intermediate causes. Correlation is often used when discovering causation because it is a necessary but not a sufficient condition. Sufficiency is tested by experimentation, such as RCTs (randomized controlled trials), or by observational studies.

The meta-rule is this: there are common reasons or root causes (deep, basic, fundamental, underlying, initial, or original), call them confounders or whatever else, and one needs to look for them in the first place. For example, monetary or fiscal policy regimes are root causes of the historical negative correlation between inflation and unemployment, and those regimes have root causes of their own.
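A small simulation makes the root-cause point concrete. It assumes a hypothetical linear model in which a common cause z drives both x and y, with no direct link between x and y. The correlation between x and y then comes out near 0.5. It largely vanishes once the linear effect of z is removed from both variables (a partial correlation).

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in xs) * sum((b - my) ** 2 for b in ys))

def residualize(vs, zs):
    """Remove the least-squares linear effect of zs from vs."""
    n = len(vs)
    mv, mz = sum(vs) / n, sum(zs) / n
    slope = sum((z - mz) * (v - mv) for z, v in zip(zs, vs)) / sum((z - mz) ** 2 for z in zs)
    return [v - mv - slope * (z - mz) for v, z in zip(vs, zs)]

rng = random.Random(42)
n = 20_000
z = [rng.gauss(0, 1) for _ in range(n)]   # hypothetical root cause (confounder)
x = [zi + rng.gauss(0, 1) for zi in z]    # x depends only on z
y = [zi + rng.gauss(0, 1) for zi in z]    # y depends only on z

raw = pearson(x, y)                                        # near 0.5: x and y look related
partial = pearson(residualize(x, z), residualize(y, z))    # near 0 once z is accounted for
print(round(raw, 2), round(partial, 2))
```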

Overall, there is an increasing amount of R&D on linear, specific/inductive/bottom-up/space-time causality models created in the narrow context of statistical Narrow AI and ML, as sampled below:

Due to their narrow causal AI assumptions, such causal AI models lack much deeper research on, among other principal things, generalization and transfer, as noted in the article Towards Causal Representation Learning, namely:

a) Learning Non-Linear Causal Relations at Scale: (1) understanding under which conditions nonlinear causal relations can be learned; (2) which training frameworks allow us to best exploit the scalability of machine learning approaches; and (3) providing compelling evidence of the advantages over (noncausal) statistical representations in terms of generalization, repurposing, and transfer of causal modules on real-world tasks.

b) Learning Causal Variables: causal representation learning, the discovery of high-level causal variables from low-level observations.

c) Understanding the Biases of Existing Deep Learning Approaches.

d) Learning Causally Correct Models of the World and the Agent: building a causal description for both a model of the agent and the environment (world models) for robust and versatile model-based reinforcement learning.

AI models that could capture causal relationships would be truly intelligent and generalizable. By contrast, ML systems excel at connecting input data to output predictions but lack the ability to reason about cause-effect relations or environment changes.

The most advanced part of ML, Deep Learning (DL), has focused too much on correlation without causation, finding statistical patterns in training data but failing to explain how they’re connected. The majority of ML/DL successes reduce to large-scale pattern recognition on collected independent and identically distributed (i.i.d.) data.

Causal knowledge and learning are about how intelligent entities think, talk, learn, explain, and understand the world in causal terms, in terms of causes and effects, agents, changes or processes, actions and manipulation.

It is about transfer learning and causal discovery, i.e., learning causal information from real-world data, including heterogeneous data where the i.i.d. assumption is dropped.
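Heterogeneous data can carry causal information that a single i.i.d. sample hides. The sketch below assumes a hypothetical linear mechanism y = 2x + noise and two environments that differ only in the spread of x. The regression slope of y on x stays near 2 in both environments. The slope of x on y shifts from about 0.25 to about 0.49.

```python
import random

def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sum((x - mx) ** 2 for x in xs)

def environment(rng, x_spread, n=20_000):
    """Hypothetical mechanism y = 2x + noise; environments differ only in the spread of x."""
    xs = [rng.gauss(0, x_spread) for _ in range(n)]
    ys = [2 * x + rng.gauss(0, 1) for x in xs]
    return xs, ys

rng = random.Random(0)
envs = [environment(rng, 0.5), environment(rng, 3.0)]
forward = [ols_slope(xs, ys) for xs, ys in envs]    # y on x: about 2 in both environments
backward = [ols_slope(ys, xs) for xs, ys in envs]   # x on y: about 0.25, then about 0.49
print(forward, backward)
```

Under these assumptions, only the slope in the direction of the mechanism stays stable across environments. That stability is one signal that causal discovery methods can exploit once data are no longer pooled as i.i.d.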

The critical role of causality, causal models, and intervention is evidenced in basic cognitive functions: reasoning, judgment, categorization, deductive or inductive inference, language, learning, and decision making.

Causal learning of cause–effect relationships, such as determining the causation among a set of two or more events or discovering causality in data, can be viewed in various ways:

Causal learning of four causes:

Causal learning is the process by which people and animals gradually learn to predict the most probable effect for a given cause and to attribute the most probable cause for the events in their environment. Learning causal relationships between the events in our environment and between our own behavior and those events is critical for survival.

Learning causal relationships can be characterized as a bottom-up process, whereby events that share contingencies become causally related, and/or a top-down process, whereby cause–effect relationships may be inferred from observation and empirically tested for their accuracy.
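The bottom-up, contingency-based route can be quantified with the ΔP index. This is a standard measure in the causal-learning literature that the text does not name. It is the probability of the effect when the cause is present minus its probability when the cause is absent. Here is a minimal sketch built on a 2×2 table of hypothetical trial counts.

```python
def delta_p(a, b, c, d):
    """Contingency index ΔP = P(effect | cause) - P(effect | no cause).

    a: cause and effect        b: cause, no effect
    c: no cause, effect        d: no cause, no effect
    (counts of observed trials; the numbers used below are hypothetical)
    """
    return a / (a + b) - c / (c + d)

print(delta_p(30, 10, 10, 30))   # 0.5: the effect is much more likely after the cause
print(delta_p(20, 20, 20, 20))   # 0.0: no contingency between the two events
```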

Causal learning underpins the development of our concepts and categories, our intuitive theories, and our capacities for planning, imagination, and inference.

All the confusion in causal knowledge comes from its defective linear definition, as set out in the Wikipedia article Causality:

Causality: influence by which one event, process or state, a cause, contributes to the production of another event, process or state, an effect, where the cause is partly responsible for the effect, and the effect is partly dependent on the cause.

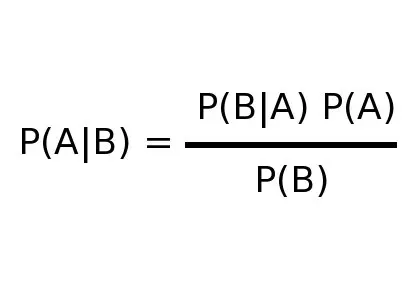

This is all marked by a giant causal fallacy of ignoring the whole world of reverse causality. On an intuitive level, factorizing the joint distribution P(Cause, Effect) into P(Cause) × P(Effect | Cause) gives the same result as factorizing it into P(Effect) × P(Cause | Effect). This equality is formulated as Bayes' theorem (Bayes' law or Bayes' rule), which is the basis of Bayesian inference, statistics, and deep machine learning algorithms.
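The equality of the two factorizations, and the Bayes' theorem that follows from it, can be checked exactly on a small joint distribution. The probabilities below are hypothetical. The sketch uses exact fractions so that equality is tested without rounding.

```python
from fractions import Fraction as F

# Hypothetical joint distribution P(Cause = c, Effect = e) over binary variables
joint = {(1, 1): F(3, 10), (1, 0): F(1, 10), (0, 1): F(2, 10), (0, 0): F(4, 10)}
CAUSE, EFFECT = 0, 1   # positions of the two variables inside each key

def marginal(var, value):
    """P(var = value), summing the joint over the other variable."""
    return sum(p for key, p in joint.items() if key[var] == value)

def conditional(var, value, given, given_value):
    """P(var = value | given = given_value)."""
    both = sum(p for key, p in joint.items()
               if key[var] == value and key[given] == given_value)
    return both / marginal(given, given_value)

# Both factorizations reproduce every entry of the joint distribution
for (c, e), p in joint.items():
    forward = marginal(CAUSE, c) * conditional(EFFECT, e, CAUSE, c)
    backward = marginal(EFFECT, e) * conditional(CAUSE, c, EFFECT, e)
    assert forward == backward == p

# Bayes' theorem: P(C=1 | E=1) = P(E=1 | C=1) P(C=1) / P(E=1)
posterior = conditional(EFFECT, 1, CAUSE, 1) * marginal(CAUSE, 1) / marginal(EFFECT, 1)
print(posterior)   # 3/5
```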

A blue neon sign showing Bayes' theorem

Such a misleading linear approach leads to systematic biases and errors, and it is reflected in too many studies on causal learning, discovery, inference, modelling, reasoning, etc., including Judea Pearl's three levels of causality: association, intervention, and counterfactuals.
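The first two of Pearl's levels can be made concrete with a hypothetical discrete model. It has a binary common cause Z, a binary X, and a binary Y, and all probabilities are invented for illustration. Association conditions on X = 1. Intervention uses the adjustment formula P(Y = 1 | do(X = 1)) = Σ_z P(Y = 1 | X = 1, z) P(z), which is valid here because Z is assumed to be the only common cause. The two answers differ: 4/5 versus 13/20.

```python
from fractions import Fraction as F

# Hypothetical model: Z -> X, Z -> Y, X -> Y (Z is a common cause of X and Y)
p_z = {0: F(1, 2), 1: F(1, 2)}            # P(Z = z)
p_x1_given_z = {0: F(1, 5), 1: F(4, 5)}   # P(X = 1 | Z = z)

def p_y1(x, z):
    """P(Y = 1 | X = x, Z = z) in the hypothetical model."""
    return F(1, 10) + F(3, 10) * x + F(1, 2) * z

def p_x_given_z(x, z):
    return p_x1_given_z[z] if x == 1 else 1 - p_x1_given_z[z]

def association(x):
    """Level 1: P(Y = 1 | X = x); observing X = x also shifts beliefs about Z."""
    p_x = sum(p_x_given_z(x, z) * p_z[z] for z in p_z)
    return sum(p_y1(x, z) * p_x_given_z(x, z) * p_z[z] for z in p_z) / p_x

def intervention(x):
    """Level 2: P(Y = 1 | do(X = x)); setting X leaves the distribution of Z untouched."""
    return sum(p_y1(x, z) * p_z[z] for z in p_z)

print(association(1), intervention(1))   # 4/5 13/20
```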

In reality, causality and causation are the most complex phenomena in the world, as evidenced by the AA ladder of CausalWorld:

The Six Layer Causal Hierarchy defines the Ladder of Reality, Causality and Mentality, Science and Technology, Human Intelligence and Non-Human Intelligence (MI or AI).

The CausalWorld [levels of causation] is a basis for all real-world constructs, such as power, force, and interactions; agents and substances; states, conditions, and situations; events, actions, and changes; processes and relations; causality and causation, causal models, causal systems, causal processes, causal mechanisms, causal patterns, causal data or information, causal codes, programs, algorithms, causal analysis, causal reasoning, causal inference, or causal graphs (path diagrams, causal Bayesian networks, or DAGs).

Overall, the Causal Machine Intelligence and Learning involves the deep learning cycle of World [Environments, Domains, Situations], Data [Perception, Percepts, Sensors], Information [Cognition, Memory], Knowledge [Learning, Thinking, Reasoning], Wisdom [Learning, Understanding, Decision], Interaction [Action, Behavior, Actuation, Adaptation, Change], and new World…

Orders are everywhere in the world, be they hierarchies or categorizations. They are studied in mathematics, in order theory, as special types of n-ary relations.

[Causal] orders are special binary relations on events/changes, which may be symmetric or antisymmetric, and reflexive, relating every element to itself.

Suppose that P is a set of events and that ≤ is a causal relation on P. Then ≤ is a partial causal order if it is reflexive, antisymmetric, and transitive, that is, if for all a, b and c in P, we have that:

a ≤ a (reflexivity)

if a ≤ b and b ≤ a then a = b (antisymmetry)

if a = b is true then b = a is also true (symmetry)

if a ≤ b and b ≤ c then a ≤ c (transitivity).
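The three properties named in the definition (reflexivity, antisymmetry, transitivity) can be checked mechanically on a finite set. The sketch below stores a relation as a set of (a, b) pairs meaning a ≤ b. It uses divisibility on {1, 2, 3, 6} as a hypothetical stand-in for a causal relation. Divisibility passes all three checks. Strict < fails reflexivity, and a relation containing a mutual pair between distinct elements fails antisymmetry.

```python
from itertools import product

def is_reflexive(elements, rel):
    """a ≤ a for every element a."""
    return all((a, a) in rel for a in elements)

def is_antisymmetric(rel):
    """a ≤ b and b ≤ a only when a = b."""
    return all(a == b or (b, a) not in rel for (a, b) in rel)

def is_transitive(rel):
    """a ≤ b and b ≤ d imply a ≤ d."""
    return all((a, d) in rel for (a, b), (c, d) in product(rel, repeat=2) if b == c)

def is_partial_order(elements, rel):
    return is_reflexive(elements, rel) and is_antisymmetric(rel) and is_transitive(rel)

S = {1, 2, 3, 6}                                           # hypothetical events
divides = {(a, b) for a in S for b in S if b % a == 0}     # a ≤ b iff a divides b
strictly_less = {(a, b) for a in S for b in S if a < b}    # not reflexive
mutual = divides | {(2, 3), (3, 2)}                        # not antisymmetric

print(is_partial_order(S, divides))        # True
print(is_partial_order(S, strictly_less))  # False
print(is_partial_order(S, mutual))         # False
```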

Every causal binary relation has a converse, also called the dual relation or transpose: the opposite or dual of the original relation, sometimes called its inverse or reciprocal.
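When a relation is stored as a set of (a, b) pairs, its converse simply swaps each pair. Here is a minimal sketch that again uses divisibility as a hypothetical stand-in for a causal relation. The converse of "divides" is "is a multiple of", and taking the converse twice returns the original relation.

```python
def converse(relation):
    """Converse (transpose) of a binary relation given as a set of (a, b) pairs."""
    return {(b, a) for (a, b) in relation}

S = {1, 2, 3, 6}                                          # hypothetical events
divides = {(a, b) for a in S for b in S if b % a == 0}    # a divides b
multiple_of = converse(divides)                           # b is a multiple of a

print((2, 6) in divides, (6, 2) in multiple_of)           # True True
print(converse(multiple_of) == divides)                   # True
```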

There are special types of causal order, such as partial orders, linear (total) orders, or chains. While many familiar orders are linear, real orders are nonlinear, such as a cyclic causal order, which is a way of arranging a set of things/objects/events in a circle.

A cyclic causal order on a set of changes X with n variables or elements is like an arrangement of X on a clock face, for an n-hour clock. Each element x in X has a "next element" and a "previous element", and taking either successors or predecessors cycles exactly once through the elements as x(1), x(2), ..., x(n).
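Here is a minimal sketch of the clock-face picture. It assumes the events are given as a Python list in their cyclic order, and the names x1 to x4 are hypothetical. Each element gets a next and a previous element. Following either map visits every element exactly once before returning to the start.

```python
def clock(arrangement):
    """Next/previous maps for elements placed around an n-hour clock face."""
    n = len(arrangement)
    nxt = {x: arrangement[(i + 1) % n] for i, x in enumerate(arrangement)}
    prev = {x: arrangement[(i - 1) % n] for i, x in enumerate(arrangement)}
    return nxt, prev

def orbit(start, step, n):
    """Follow a successor (or predecessor) map from start through n elements."""
    seen = [start]
    for _ in range(n - 1):
        seen.append(step[seen[-1]])
    return seen

events = ["x1", "x2", "x3", "x4"]
nxt, prev = clock(events)
print(orbit("x1", nxt, len(events)))    # ['x1', 'x2', 'x3', 'x4']
print(orbit("x1", prev, len(events)))   # ['x1', 'x4', 'x3', 'x2']
```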

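The general definition is as follows: a cyclic causal order on a set X is a ternary relation C ⊂ X³, written [a, b, c], that satisfies the following axioms:

if [a, b, c] then [b, c, a] (cyclicity)

if [a, b, c] then not [c, b, a] (asymmetry)

if [a, b, c] and [a, c, d] then [a, b, d] (transitivity)

if a, b, and c are mutually distinct, then either [a, b, c] or [c, b, a] (connexity)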
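The standard cyclic-order axioms (cyclicity, asymmetry, transitivity, connexity) can be checked by brute force. The check below uses a relation built from a clock-face arrangement, assuming [a, b, c] means "moving clockwise from a, b is reached before c". The sketch runs on five hypothetical events. Removing a single triple already breaks cyclicity.

```python
from itertools import permutations

def clock_relation(arrangement):
    """Ternary relation [a, b, c]: moving clockwise from a, b is reached before c."""
    n = len(arrangement)
    pos = {x: i for i, x in enumerate(arrangement)}
    return {(a, b, c) for a, b, c in permutations(arrangement, 3)
            if (pos[b] - pos[a]) % n < (pos[c] - pos[a]) % n}

def cyclic_axioms(elements, C):
    """Report which of the four cyclic-order axioms the ternary relation C satisfies."""
    return {
        "cyclicity": all((b, c, a) in C for a, b, c in C),
        "asymmetry": all((c, b, a) not in C for a, b, c in C),
        "transitivity": all((a, b, d) in C
                            for a, b, c in C
                            for a2, c2, d in C if (a2, c2) == (a, c)),
        "connexity": all((a, b, c) in C or (c, b, a) in C
                         for a, b, c in permutations(elements, 3)),
    }

events = ["e1", "e2", "e3", "e4", "e5"]   # hypothetical events on the clock face
C = clock_relation(events)
print(cyclic_axioms(events, C))                                       # all four True
print(cyclic_axioms(events, C - {("e1", "e2", "e3")})["cyclicity"])   # False
```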

And cycles overrule linear orders. Given a cyclically ordered set (K, [ ]) and a linearly ordered set (L, <), the (total) lexicographic product is a cyclic order on the product set K × L, defined by [(a, x), (b, y), (c, z)] if one of the following holds:

[a, b, c]

a = b ≠ c and x < y

b = c ≠ a and y < z

c = a ≠ b and z < x

a = b = c and [x, y, z]

The lexicographic product K × L globally looks like K and locally looks like L; it can be thought of as K copies of L. This construction is sometimes used to characterize cyclically ordered groups.
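The "K copies of L" picture can be checked directly. The sketch builds the lexicographic product from its standard defining conditions (one per commented line in the code). It then compares the result with the clock-face order obtained by laying out one copy of L inside each position of K. K is given as a list in its cyclic order and L as a list in increasing order. Both sets are hypothetical.

```python
from itertools import permutations

def between(pos, a, b, c):
    """[a, b, c] on a circle: a, b, c distinct, and going forward from a we meet b before c."""
    n = len(pos)
    return len({a, b, c}) == 3 and (pos[b] - pos[a]) % n < (pos[c] - pos[a]) % n

def clock_relation(arrangement):
    """Cyclic order of points laid out around a clock face in the given sequence."""
    pos = {p: i for i, p in enumerate(arrangement)}
    return {t for t in permutations(arrangement, 3) if between(pos, *t)}

def lexicographic_product(K, L):
    """Cyclic order on K x L; K is listed in its cyclic order, L in increasing order."""
    pos_k = {k: i for i, k in enumerate(K)}
    pos_l = {v: i for i, v in enumerate(L)}   # L's linear order read as a cyclic order
    points = [(k, v) for k in K for v in L]
    rel = set()
    for (a, x), (b, y), (c, z) in permutations(points, 3):
        if (between(pos_k, a, b, c)                              # first coordinates decide
                or (a == b != c and x < y)                       # a, b in the same copy of L
                or (b == c != a and y < z)                       # b, c in the same copy of L
                or (c == a != b and z < x)                       # c, a in the same copy of L
                or (a == b == c and between(pos_l, x, y, z))):   # all in one copy of L
            rel.add(((a, x), (b, y), (c, z)))
    return rel, points

K = ["north", "east", "south", "west"]   # hypothetical cyclically ordered set
L = [1, 2, 3]                            # hypothetical linearly ordered set
rel, points = lexicographic_product(K, L)
print(rel == clock_relation(points))     # True: K x L looks like K copies of L
```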

All in all, for any two correlated events, changes, or variables, x and y, all their possible relationships include:

Cyclical causation takes the form of feedback, which “occurs when outputs of a system are routed back as inputs as part of a chain of cause-and-effect that forms a circuit or loop”. In a feedback loop, all outputs of a process are available as causal inputs to the process. Feedback is either positive or negative. These two kinds are also called self-reinforcing/self-correcting, reinforcing/balancing, discrepancy-enhancing/discrepancy-reducing, or regenerative/degenerative, respectively. Moreover, any positive feedback can be a virtuous or a vicious cycle, depending on whether its interaction effects are constructive or destructive.
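The two kinds of feedback can be contrasted with a toy discrete-time loop. This is a sketch under assumed update rules, not a model of any particular system. In the positive (self-reinforcing) loop, the output is fed back and amplified by a gain. In the negative (self-correcting) loop, only the deviation from a target is fed back, so the state settles toward the target.

```python
def positive_feedback(x0, gain, steps):
    """Self-reinforcing loop: the output is fed back and amplified, x[t+1] = x[t] + gain * x[t]."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] + gain * xs[-1])
    return xs

def negative_feedback(x0, target, gain, steps):
    """Self-correcting loop: the deviation is fed back, x[t+1] = x[t] - gain * (x[t] - target)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - gain * (xs[-1] - target))
    return xs

grow = positive_feedback(1.0, 0.1, 10)           # keeps growing, about 2.59 after 10 steps
settle = negative_feedback(10.0, 2.0, 0.5, 10)   # the gap to 2.0 halves at every step
print(round(grow[-1], 4), settle[-1])
```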

Feedback is used extensively in physical, chemical, biological, engineering, or digital systems. Feedback can give rise to incredibly complex behaviors, as biological systems contain many types of regulatory circuits, both positive and negative.

Causal feedback systems, mechanisms, loops, and interactions are involved in any complex behavior of any piece of reality, physical, biological, mental, economic, political, ecologic, social, virtual or digital.

Here is an illustrative case study of one of the most challenging macroeconomic problems: business cycle recession and recovery.

A recession is a specific sort of causal vicious cycle, with cascading declines in output, employment, income, and sales that feed back into a further fall in output, spreading rapidly from industry to industry, country to country and region to region.

On the opposite side, a business cycle recovery begins when that recessionary vicious cycle reverses its causation and effects and becomes a virtuous cycle, with rising output triggering job gains, rising incomes, and increasing sales that feed back into a further rise in output. The recovery can persist and result in a sustained economic expansion only if it becomes self-feeding, which is ensured by this domino effect driving the diffusion of the revival across the economy.

How RAI Could Measure Recession and Recovery Business Cycles

The severity of a recession is measured by the three D's: depth, diffusion, and duration. A recession's depth is determined by the magnitude of the peak-to-trough decline in the broad measures of output, employment, income, and sales. Its diffusion is measured by the extent of its spread across economic activities, industries, and geographical regions. Its duration is determined by the time interval between the peak and the trough.

In analogous fashion, the strength of an expansion is determined by how pronounced, pervasive, and persistent it turns out to be. These three P's correspond to the three D's of recession.
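The three D's and their mirror-image three P's can be sketched as simple calculations on an output series. The definitions below are assumptions for illustration:

- Depth (and how pronounced an expansion is) is taken as the relative peak-to-trough or trough-to-peak change.
- Diffusion (and pervasiveness) is taken as the share of sectors moving in the same direction.
- Duration (and persistence) is taken as the number of periods between turning points.

All series and sector figures are invented.

```python
def three_ds(output, sectors):
    """Depth, diffusion, and duration of a recession.

    output:  aggregate output index over time (hypothetical data)
    sectors: {name: (value at the peak, value at the trough)}
    """
    trough = min(range(len(output)), key=output.__getitem__)
    peak = max(range(trough + 1), key=output.__getitem__)       # highest point before the trough
    depth = (output[peak] - output[trough]) / output[peak]
    diffusion = sum(t < p for p, t in sectors.values()) / len(sectors)
    duration = trough - peak
    return depth, diffusion, duration

def three_ps(output, sectors):
    """How pronounced, pervasive, and persistent an expansion is.

    sectors: {name: (value at the trough, value at the peak)}
    """
    trough = min(range(len(output)), key=output.__getitem__)
    peak = max(range(trough, len(output)), key=output.__getitem__)  # highest point after the trough
    pronounced = (output[peak] - output[trough]) / output[trough]
    pervasive = sum(p > t for t, p in sectors.values()) / len(sectors)
    persistent = peak - trough
    return pronounced, pervasive, persistent

recession = three_ds([100, 102, 104, 101, 97, 94, 95, 98],
                     {"manufacturing": (50, 44), "retail": (30, 25), "services": (24, 25)})
expansion = three_ps([94, 95, 98, 101, 105, 108, 107],
                     {"manufacturing": (44, 52), "retail": (25, 31), "services": (25, 25)})
print(recession, expansion)
```

With these invented numbers, the recession is about 9.6% deep, reaches two of three sectors, and lasts three periods. The expansion rises about 14.9%, reaches two of three sectors, and persists for five periods.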

Now, let us see how all this could play out in a real-life context.

Five top-performing tech stocks in the market, namely Facebook, Amazon, Apple, Microsoft, and Alphabet’s Google (FAAMG), represent the U.S. Narrow AI technology leaders. Their products span standard machine learning, deep learning, and data analytics cloud platforms, mobile and desktop systems, hosting services, online operations, and software products. The five FAAMG companies had a joint market capitalization of around $4.5 trillion a year ago and now exceed $7.6 trillion; all of them are among the top 10 companies in the US.
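Taken at face value, the two capitalization figures imply a one-year rise of roughly 69%:

```latex
\frac{7.6 - 4.5}{4.5} = \frac{3.1}{4.5} \approx 0.689 \quad (\approx 69\%)
```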

According to Gartner's modest predictions, the total FAI-derived business value is forecast to reach $3.9 trillion in 2022.

You don’t need to be a great economist to foresee that such speculative, circular, and leveraged mega-bubbles lead the COVID-19-plagued global economy into deep recession and real economic collapse.

A real solution here is not a fake and false, narrow and weak, acausal AI of ML and DL, relying on blind statistics and mathematics to imitate some specific parts of human cognition or intelligent behavior.

What the pandemic-stricken world needs is the Real AI Technology which must be developed as a digital general purpose technology, like a Synergetic Cyber-Human Intelligence.

Human minds as collective intelligence and world knowledge will be integrated with the Human-Machine Intelligence and Learning (HMIL) Global Platform, or Global AI:

GAI = HMIL = AI + ML + DL + NLU + 6G+ Bio-, Nano-, Cognitive engineering + Robotics + SC, QC + the Internet of Everything + Human Minds + MME, BCE + Digital Superintelligence = Encyclopedic Intelligence = Real AI = Global AI = Global Cyber-Human Supermind

Again, the 4th Industrial Revolution (4IR), as a fusion of advances in artificial intelligence (AI), robotics, the Internet of Things (IoT), genetic engineering, quantum computing, and other digital technologies, transforms the human economy into a machine economy.

A human-like AI/ML/DL technology might rapidly make entire industries obsolete, triggering widespread mass unemployment while over-enriching FAI Big Tech.
